cache

What is a cache?

A cache -- pronounced CASH -- is hardware or software that is used to store something, usually data, temporarily in a computing environment.

It is a small amount of faster, more expensive memory used to speed up access to recently or frequently accessed data. Cached data is stored temporarily in an accessible storage medium that's local to the cache client and separate from the main storage. Cache is commonly used by the central processing unit (CPU), applications, web browsers and operating systems.

Cache is used because bulk or main storage can't keep up with the demands of clients. Cache decreases data access times, reduces latency and improves input/output (I/O) performance. Because almost all application workloads depend on I/O operations, the caching process improves application performance.

How does a cache work?

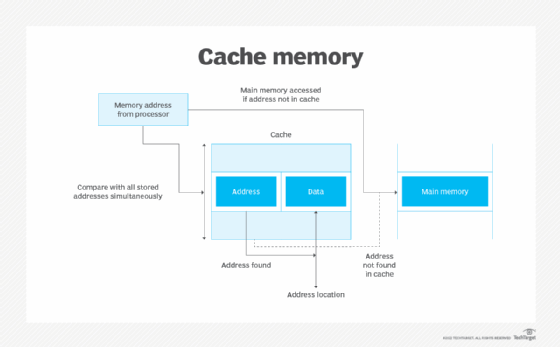

When a cache client attempts to access data, it first checks the cache. If the data is found there, that is referred to as a cache hit. The percentage of attempts that result in a cache hit is called the cache hit rate or hit ratio.

Requested data that isn't found in the cache, referred to as a cache miss, is pulled from main memory or storage and copied into the cache. How this is done, and which data is evicted from the cache to make room for the new data, depends on the caching algorithm, cache protocols and system policies being used.
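The hit/miss flow described above can be sketched as a small read-through cache that counts hits and misses and reports the hit ratio. This is a minimal illustration, not any particular product's design: the class name `ReadThroughCache` and the `load_from_backing_store` callback are hypothetical, and the sketch never evicts anything, whereas a real cache would apply a replacement algorithm once it fills up.

```python
from typing import Any, Callable, Dict, Hashable


class ReadThroughCache:
    """Checks the cache first and falls back to a slower store on a miss.

    This sketch never evicts entries; a real cache would apply a replacement
    algorithm once it fills up.
    """

    def __init__(self, load_from_backing_store: Callable[[Hashable], Any]) -> None:
        self._load = load_from_backing_store  # hypothetical slow path
        self._entries: Dict[Hashable, Any] = {}
        self.hits = 0
        self.misses = 0

    def get(self, key: Hashable) -> Any:
        if key in self._entries:        # cache hit: serve the local copy
            self.hits += 1
            return self._entries[key]
        self.misses += 1                # cache miss: go to the slower store
        value = self._load(key)
        self._entries[key] = value      # copy it into the cache for next time
        return value

    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


# Usage: a plain dict stands in for main memory or storage.
backing_store = {"a": 1, "b": 2, "c": 3}
cache = ReadThroughCache(backing_store.__getitem__)
for key in ["a", "b", "a", "a", "c", "b"]:
    cache.get(key)
print(cache.hits, cache.misses, cache.hit_ratio())  # 3 3 0.5
```

Each key misses once on first use and hits afterward, so six requests over three distinct keys give a hit ratio of 0.5.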

Web browsers like Safari, Firefox and Chrome use browser caching to improve the performance of frequently accessed webpages. When a user visits a webpage, the requested files are stored in that browser's cache on the user's local storage.

To display a previously accessed page, the browser gets most of the files it needs from the cache rather than having them resent from the web server. This approach is called read cache. The browser can read data from the browser cache faster than it can download the files from the web server again.
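Browsers decide whether a cached file can be reused based on HTTP response headers such as `Cache-Control: max-age`. The sketch below models only that one idea: a stored copy is served while it is younger than a freshness lifetime, and is downloaded again once it goes stale. The class `BrowserReadCache` and the `fetch_from_server` callback are hypothetical, and real browsers apply many more rules (revalidation, `no-store`, and so on) that are omitted here.

```python
import time
from typing import Callable, Dict, Tuple


class BrowserReadCache:
    """Toy read cache: a stored copy is reused while it is younger than max_age."""

    def __init__(
        self,
        fetch_from_server: Callable[[str], bytes],
        max_age: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch = fetch_from_server  # hypothetical network download
        self._max_age = max_age          # seconds a stored copy stays fresh
        self._clock = clock
        self._store: Dict[str, Tuple[bytes, float]] = {}  # url -> (body, time stored)
        self.server_requests = 0

    def get(self, url: str) -> bytes:
        now = self._clock()
        entry = self._store.get(url)
        if entry is not None and now - entry[1] < self._max_age:
            return entry[0]              # fresh copy: no request to the server
        body = self._fetch(url)          # missing or stale: download again
        self.server_requests += 1
        self._store[url] = (body, now)
        return body


# Usage with a fake clock so the example is deterministic.
fake_now = [0.0]
browser_cache = BrowserReadCache(
    lambda url: f"<html>{url}</html>".encode(),
    max_age=60,
    clock=lambda: fake_now[0],
)
browser_cache.get("/index.html")   # first visit: downloaded
fake_now[0] = 30.0
browser_cache.get("/index.html")   # 30 s later: served from the cache
fake_now[0] = 90.0
browser_cache.get("/index.html")   # 90 s after storing: stale, downloaded again
print(browser_cache.server_requests)  # 2
```

Injecting the clock keeps the example testable; by default the sketch uses `time.monotonic`, which is not affected by changes to the system clock.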

Cache is important for several reasons:

- The use of cache reduces latency for active data. This results in higher performance for a system or application.

- It diverts I/O to cache, reducing I/O operations to external storage and to lower levels of the storage area network (SAN).

- Data can remain permanently in traditional storage or external storage arrays. This maintains the consistency and integrity of the data using features such as snapshots and replication, provided by the storage or array.

- When flash is used as the cache, it serves only the part of the workload that benefits from lower latency. This makes cost-effective use of more expensive storage.

Cache memory is either included on the CPU or embedded in a chip on the system board. In newer machines, the only way to increase cache memory is to upgrade the system board and CPU to the newest generation. Older system boards may have empty slots that can be used to increase the cache memory.

How are caches used?

Caches store temporary files using both hardware and software components. An example of a hardware cache is a CPU cache. This is a small chunk of memory on the computer's processor that stores recently or frequently used instructions and data.

Many applications also have their own cache. This type of cache temporarily stores app-related data, files or instructions for fast retrieval.

Web browsers are a good example of application caching. As mentioned earlier, browsers have their own cache that stores information from previous browsing sessions for use in future sessions. A user wanting to rewatch a YouTube video can load it faster because the browser retrieves it from the cache where it was saved during the previous session.

Other types of software that use caches include the following:

- operating systems, where commonly used instructions and files are stored;

- content delivery networks, where information is cached on the server side to deliver websites faster;

- the Domain Name System (DNS), where caches store the records used to translate domain names into Internet Protocol (IP) addresses; and

- databases, where they can reduce the latency of database queries.
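A simple form of database caching is to keep query results in the application's own memory. The sketch below uses Python's standard `functools.lru_cache` to cache the result of a lookup query; an in-memory SQLite database stands in for a real database server, and the `products` table and `product_name` function are hypothetical.

```python
import sqlite3
from functools import lru_cache
from typing import Optional

# An in-memory SQLite database stands in for a real database server.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO products (name) VALUES (?)", [("pen",), ("ink",)])


@lru_cache(maxsize=128)
def product_name(product_id: int) -> Optional[str]:
    # The query runs only on a cache miss; repeated calls with the same
    # product_id are answered from the in-process cache.
    row = conn.execute(
        "SELECT name FROM products WHERE id = ?", (product_id,)
    ).fetchone()
    return row[0] if row else None


product_name(1)  # miss: queries the database
product_name(1)  # hit: no query
product_name(2)  # miss
print(product_name.cache_info())
# CacheInfo(hits=1, misses=2, maxsize=128, currsize=2)
```

Because the cached results are not tied to the table, an `UPDATE` to `products` would not be reflected until `product_name.cache_clear()` is called; this is the outdated-information risk that comes with any cache.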

What are the benefits of caches?

There are several benefits of caching, including the following:

- Performance. Storing data in a cache lets a computer run faster. For example, a browser cache that stores files from previous browsing sessions speeds up follow-up sessions. A database cache speeds up retrieval of data that would otherwise take considerable time and resources to fetch from the database.

- Offline work. Caches can also let applications keep working without an internet connection. Application cache provides quick access to data that has been recently accessed or is frequently used. However, the cache may not provide access to all application functions.

- Resource efficiency. Besides speed and flexibility, caching helps physical devices conserve resources. For example, fast access to cache conserves battery power.

What are the drawbacks of caches?

There are issues with caches, including the following:

- Corruption. Caches can be corrupted, making stored data no longer useful. Data corruption can cause applications such as browsers to crash or display data incorrectly.

- Performance. Caches are generally small stores of temporary memory. If they get too large, they can cause performance to degrade. They also can consume memory that other applications might need, negatively impacting application performance.

- Outdated information. Sometimes an app cache displays old or outdated information. This can cause an application glitch or return misleading information. If a website or application gets updated on the internet, a cached version from a previous session would not reflect the update. This is not a problem for static content, but it is a problem for dynamic content that changes during or between sessions.

Cache algorithms

Cache algorithms determine how a cache is maintained, including which entries are removed when the cache is full. Examples include the following:

- Least Frequently Used (LFU) keeps track of how often each cache entry is accessed. The item with the lowest count is removed first.

- Least Recently Used (LRU) keeps recently accessed items near the top of the cache. When the cache reaches its limit, the least recently accessed items are removed.

- Most Recently Used (MRU) removes the most recently accessed items first. This approach works best when older items are more likely to be used again.
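The three algorithms above differ only in which entry they choose to evict. The sketch below is a minimal, assumption-laden illustration: one hypothetical `EvictingCache` class tracks recency with an `OrderedDict` and access counts with a dict, and picks its victim according to a `policy` string. Production caches use more efficient structures (LFU here scans every entry), and real LFU variants differ in how they break ties; this sketch breaks ties by evicting the least recently used key.

```python
from collections import OrderedDict
from typing import Any, Dict, Hashable, List, Optional


class EvictingCache:
    """Fixed-capacity cache with a selectable LFU, LRU or MRU eviction policy."""

    def __init__(self, capacity: int, policy: str) -> None:
        if policy not in ("LFU", "LRU", "MRU"):
            raise ValueError("policy must be 'LFU', 'LRU' or 'MRU'")
        self.capacity = capacity
        self.policy = policy
        # Ordering doubles as recency order: least recently used first.
        self._data: "OrderedDict[Hashable, Any]" = OrderedDict()
        self._counts: Dict[Hashable, int] = {}  # access count per key

    def _touch(self, key: Hashable) -> None:
        self._data.move_to_end(key)               # mark as most recently used
        self._counts[key] = self._counts.get(key, 0) + 1

    def _victim(self) -> Hashable:
        if self.policy == "LRU":
            return next(iter(self._data))         # least recently used
        if self.policy == "MRU":
            return next(reversed(self._data))     # most recently used
        # LFU: lowest access count; ties go to the least recently used key.
        return min(self._data, key=lambda k: self._counts[k])

    def get(self, key: Hashable) -> Optional[Any]:
        if key not in self._data:
            return None                           # miss (None is ambiguous in this sketch)
        self._touch(key)
        return self._data[key]

    def put(self, key: Hashable, value: Any) -> None:
        if key not in self._data and len(self._data) >= self.capacity:
            victim = self._victim()
            del self._data[victim]
            del self._counts[victim]
        self._data[key] = value
        self._touch(key)

    def keys(self) -> List[Hashable]:
        return list(self._data)


def survivors(policy: str) -> List[Hashable]:
    cache = EvictingCache(capacity=3, policy=policy)
    for k in ("a", "b", "c"):
        cache.put(k, k.upper())
    for k in ("a", "a", "b", "c", "c"):           # counts: a=3, b=2, c=3
        cache.get(k)
    cache.put("d", "D")                           # full: one entry is evicted
    return sorted(cache.keys())


for p in ("LRU", "MRU", "LFU"):
    print(p, survivors(p))
# LRU ['b', 'c', 'd']
# MRU ['a', 'b', 'd']
# LFU ['a', 'c', 'd']
```

The same access sequence leaves `a` as the least recently used entry, `c` as the most recently used and `b` as the least frequently used, so each policy evicts a different key.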

Cache policies

Various caching policies determine how the cache operates. They include the following:

- Write-around cache writes data directly to storage, skipping the cache. This prevents the cache from being flooded when there are large amounts of write I/O. The disadvantage of this approach is that data isn't cached until it's read from storage. As a result, the first read of newly written data is slower because that data hasn't been cached yet.

- Write-through cache writes data to cache and storage. The advantage of write-through cache is that newly written data is always cached, so it can be read quickly. A drawback is that write operations aren't considered complete until the data is written to both the cache and primary storage. This can introduce latency into write operations.

- Write-back cache is like write-through in that all write operations are directed to the cache. But with write-back cache, the write operation is considered complete as soon as the data is cached. The data is copied from the cache to storage afterward.

With this approach, both read and write operations have low latency. The downside is that, depending on what caching mechanism is used, the data remains vulnerable to loss until it's committed to storage.
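The three write policies can be compared with a toy model in which a plain dict stands in for primary storage. This is a sketch under simplifying assumptions, not a storage-system implementation: the `WritePolicyCache` class and its `flush` method are hypothetical, there is no eviction, and the write-back variant only copies dirty entries to storage when `flush` is called explicitly, whereas real write-back caches typically do this on eviction or on a schedule.

```python
from typing import Any, Dict, Hashable, Set


class WritePolicyCache:
    """Toy cache in front of a dict that stands in for primary storage."""

    def __init__(self, storage: Dict[Hashable, Any], policy: str) -> None:
        if policy not in ("write-around", "write-through", "write-back"):
            raise ValueError("unknown policy")
        self.storage = storage
        self.policy = policy
        self.cache: Dict[Hashable, Any] = {}
        self.dirty: Set[Hashable] = set()  # write-back only: cached, not yet stored

    def write(self, key: Hashable, value: Any) -> None:
        if self.policy == "write-around":
            self.storage[key] = value      # bypass the cache entirely
            self.cache.pop(key, None)      # drop any stale cached copy
        elif self.policy == "write-through":
            self.cache[key] = value        # done only after both copies are written
            self.storage[key] = value
        else:  # write-back
            self.cache[key] = value        # done as soon as the data is cached
            self.dirty.add(key)            # at risk of loss until flushed

    def read(self, key: Hashable) -> Any:
        if key in self.cache:
            return self.cache[key]
        value = self.storage[key]          # miss: read from storage, then cache it
        self.cache[key] = value
        return value

    def flush(self) -> None:
        """Copy dirty entries from the cache to storage (write-back)."""
        for key in self.dirty:
            self.storage[key] = self.cache[key]
        self.dirty.clear()


for policy in ("write-around", "write-through", "write-back"):
    disk: Dict[Hashable, Any] = {}
    c = WritePolicyCache(disk, policy)
    c.write("x", 42)
    print(f"{policy}: cached={'x' in c.cache}, in storage={'x' in disk}")
# write-around: cached=False, in storage=True
# write-through: cached=True, in storage=True
# write-back: cached=True, in storage=False
```

In the write-back case, the value exists only in the cache until `flush()` runs; if the process stopped before then, the write would be lost, which is the vulnerability described above.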

What does clearing a cache do and how often should it be done?

Clearing the cache frees storage space on a device. A browser cache uses storage to hold files downloaded from the web. Clearing it can solve user issues, such as the following:

- A full cache can cause applications to crash or not load properly.

- Old caches can contain outdated information and files, causing webpages to not load or to load incorrectly. Clearing them gets rid of the outdated information.

- Browsers also store personal information, such as saved passwords, along with cached files. Clearing this data can protect the user.

Most browser caches can be cleared from the browser's settings.

A cache should be cleared periodically, but not daily. Clearing the cache too often is not a good use of resources because of these issues:

- the user loses the benefit of quick file access;

- caches delete some files on their own and don't need this sort of maintenance; and

- the computer will cache new files and fill the space up again.

How do you clear a cache?

Browser caches are the ones most end users are familiar with. In most cases, they are cleared from a settings or preferences tab or menu item. Those menus also contain privacy settings, cookies and history, which users can delete or alter from the same place.

Many desktop web browsers have keyboard shortcuts to reach these menus quickly:

- On Microsoft Windows machines, press Ctrl-Shift-Delete.

- On Apple Macs, press Command-Shift-Delete.

These shortcuts bring the user to the settings menu, which differs slightly from browser to browser.

Here's how to delete the cache on Google Chrome:

- Open Chrome's settings and scroll to the "Privacy and security" section.

- Select Clear browsing data. Pressing Ctrl-Shift-Delete on a Windows machine, or Command-Shift-Delete on a Mac, opens this dialog directly.

- Choose a time range from the drop-down menu, or choose All time to delete the entire cache.

- Check Cached images and files.

- Click Clear data.

Types of caches

Caching is used for many purposes. The various types of cache include the following:

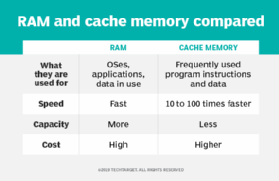

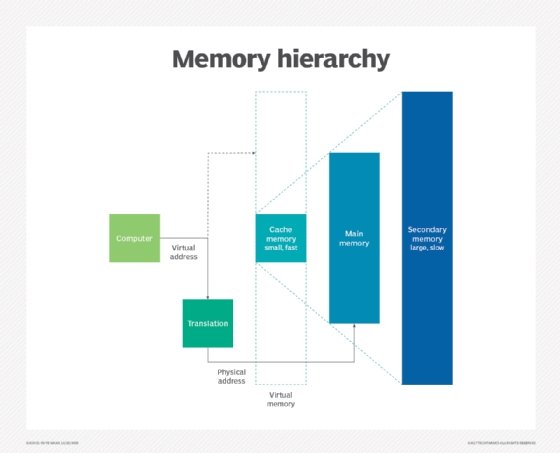

Cache memory is RAM that a microprocessor can access faster than it can access regular RAM. It is often tied directly to the CPU and is used to cache frequently accessed instructions and data. A RAM cache is faster than a disk-based one, but cache memory is faster than a RAM cache because it's closer to the CPU.

Cache server, sometimes called a proxy cache, is a dedicated network server or service. Cache servers save webpages or other internet content locally.

CPU cache is a small amount of memory placed on the CPU. This memory operates at the speed of the CPU rather than at the system bus speed and is much faster than RAM.

Disk cache holds recently read data and, sometimes, adjacent data areas that are likely to be accessed soon. Some disk caches cache data based on how frequently it's read. Frequently read storage blocks are referred to as hot blocks and are automatically sent to the cache.
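Frequency-based promotion of hot blocks can be sketched as a read counter per block plus a threshold. Everything specific here is an assumption for illustration: the `HotBlockCache` class, the `read_block` callback and the default threshold of three reads are hypothetical, and the sketch has no size limit or eviction, which a real disk cache would need.

```python
from typing import Callable, Dict


class HotBlockCache:
    """Caches a block once it has been read from disk hot_threshold times."""

    def __init__(self, read_block: Callable[[int], bytes], hot_threshold: int = 3) -> None:
        self._read_block = read_block      # hypothetical slow disk read
        self._threshold = hot_threshold    # assumed promotion threshold
        self._reads: Dict[int, int] = {}   # disk reads seen per block number
        self.cached: Dict[int, bytes] = {}
        self.disk_reads = 0

    def read(self, block_no: int) -> bytes:
        if block_no in self.cached:        # hot block: served from the cache
            return self.cached[block_no]
        data = self._read_block(block_no)
        self.disk_reads += 1
        self._reads[block_no] = self._reads.get(block_no, 0) + 1
        if self._reads[block_no] >= self._threshold:
            self.cached[block_no] = data   # the block is now "hot"
        return data


disk = {2: b"two", 7: b"seven"}
hot = HotBlockCache(disk.__getitem__, hot_threshold=3)
for block in (7, 7, 7, 7, 7, 2):
    hot.read(block)
print(hot.disk_reads, sorted(hot.cached))  # 4 [7]
```

Block 7 is read from disk three times and then served from the cache, while block 2, read only once, never becomes hot.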

Flash cache, also known as solid-state drive caching, uses NAND flash memory chips to temporarily store data. Flash cache fulfills data requests faster than if the cache were on a traditional hard disk drive or part of the backing store.

Persistent cache is storage capacity where data isn't lost if the system reboots or crashes. Data is protected by a battery backup, or it is flushed to battery-backed dynamic RAM (DRAM) as extra protection against data loss.

RAM cache usually includes permanent memory embedded on the motherboard and memory modules that can be installed in dedicated slots or attachment locations. The mainboard bus provides access to this memory. CPU cache memory is between 10 and 100 times faster than RAM, requiring only a few nanoseconds to respond to a CPU request. RAM cache has a faster response time than magnetic media, which responds to I/O requests in milliseconds.
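Why a small, fast cache helps so much depends on the hit ratio. In a simplified two-level model where every access checks the cache first and only misses go on to RAM, the average access time is `cache latency + (1 - hit ratio) * RAM latency`. The function below computes this; the 2 ns and 80 ns latencies are hypothetical round numbers chosen for illustration, not measurements of any particular system.

```python
def effective_access_time(hit_ratio: float, cache_ns: float, backing_ns: float) -> float:
    """Average time per access in a simple two-level model.

    Every access checks the cache (cache_ns); a miss, which happens with
    probability 1 - hit_ratio, also pays the backing memory's latency.
    """
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return cache_ns + (1.0 - hit_ratio) * backing_ns


# Hypothetical latencies: 2 ns for the cache, 80 ns for RAM.
for h in (0.5, 0.95, 0.99):
    print(f"hit ratio {h:.2f}: {effective_access_time(h, 2.0, 80.0):.1f} ns")
# hit ratio 0.50: 42.0 ns
# hit ratio 0.95: 6.0 ns
# hit ratio 0.99: 2.8 ns
```

Under these assumed numbers, raising the hit ratio from 95% to 99% cuts the average access time by more than half, which is why replacement algorithms that keep the right data in the cache matter.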

Translation lookaside buffer, also called TLB, is a memory cache that stores recent translations of virtual memory addresses to physical addresses and speeds up virtual memory operations.
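A TLB's job can be sketched in software as a small cache in front of the page table: split a virtual address into a page number and an offset, look the page up in the TLB, and fall back to the full page table on a miss. This is a conceptual model only. The 4 KiB page size, the four-entry capacity, the LRU replacement and the `TLB` class itself are assumptions; real TLBs are hardware structures whose size and replacement policy vary by processor.

```python
from collections import OrderedDict
from typing import Dict

PAGE_SIZE = 4096  # assumption: 4 KiB pages


class TLB:
    """Small LRU cache of virtual-page-number -> physical-frame translations."""

    def __init__(self, page_table: Dict[int, int], entries: int = 4) -> None:
        self._page_table = page_table      # slow path: the full page table
        self._entries = entries            # assumed TLB capacity
        self._tlb: "OrderedDict[int, int]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def translate(self, virtual_addr: int) -> int:
        vpn, offset = divmod(virtual_addr, PAGE_SIZE)
        if vpn in self._tlb:               # TLB hit: no page-table lookup
            self.hits += 1
            self._tlb.move_to_end(vpn)
            frame = self._tlb[vpn]
        else:                              # TLB miss: consult the page table
            self.misses += 1
            frame = self._page_table[vpn]
            self._tlb[vpn] = frame
            if len(self._tlb) > self._entries:
                self._tlb.popitem(last=False)  # evict least recently used entry
        return frame * PAGE_SIZE + offset


tlb = TLB(page_table={0: 5, 1: 9})
print(tlb.translate(0x10))           # 20496: page 0 -> frame 5, offset 16 (miss)
print(tlb.translate(PAGE_SIZE + 8))  # 36872: page 1 -> frame 9, offset 8 (miss)
print(tlb.translate(0x20))           # 20512: page 0 again, served by the TLB (hit)
print(tlb.hits, tlb.misses)          # 1 2
```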
