MemVerge pushes big memory computing for every app

MemVerge claims its Memory Machine software, layered on Intel Optane DIMMs and DRAM, creates a petabyte-sized logical pool that lets any application run in main memory.

Startup MemVerge is making its "Big Memory" software available in early access, allowing organizations to test-drive AI applications in fast, scalable main memory.

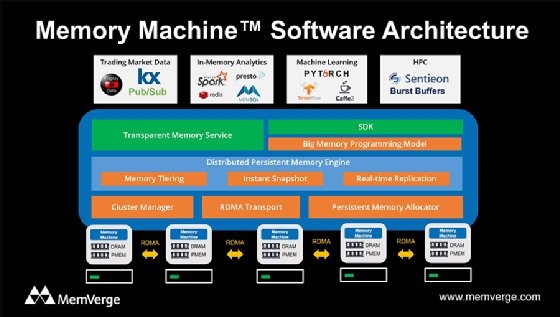

MemVerge's Memory Machine software virtualizes underlying dynamic RAM (DRAM) and Intel Optane devices into large persistent memory lakes. It provides data services from memory, including replication, snapshots and tiered storage.

The vendor, based in Milpitas, Calif., also closed a $19 million institutional funding round led by Intel Capital, with participation from Cisco Investments, NetApp and SK Hynix. That adds to the $24.5 million MemVerge received from a consortium of venture funds at launch.

The arrival of Intel Optane memory devices has moved storage class memory (SCM) from a fringe technology to one that shows up in major enterprise storage arrays. Other than in-memory databases, however, most applications are not designed to run efficiently in volatile DRAM. Application code first needs to be rewritten for memory, and memory does not natively provide data services or let multiple servers share data.

MemVerge Memory Machine at work

Memory Machine software will usher in big memory computing to serve legacy and modern applications and break memory-storage bottlenecks, MemVerge CEO Charles Fan said.

"MemVerge Memory Machine is doing to persistent memory what VMware vSphere did to CPUs," he said.

Prior to launching MemVerge, Fan spent seven years as head of VMware's storage business unit, where he helped create VMware vSAN hyper-converged software. He previously started file virtualization vendor Rainfinity and sold it to EMC in 2005. MemVerge cofounder Shuki Bruck, also a founder of Rainfinity, helped start XtremIO, an early all-flash storage array that is now part of Dell EMC's midrange storage portfolio.

MemVerge has revised its product since emerging from stealth in 2019. The startup initially planned to virtualize memory and storage in Intel two-socket servers and scale up to 128 nodes. Fan said the company instead decided to offer Memory Machine solely as a software subscription for x86 servers. Expected use cases include financial services, AI, big data and machine learning.

MemVerge plans to introduce full storage services in 2021. Those services would allow Intel Optane cards to be programmed as low-latency block storage and data to be tiered to back-end SAS SSDs.

"Our first step is to target in-memory applications and memory-intensive applications that have minimal access to storage. And in this case, we intercept all of the memory services and declare a value through the memory interface for existing applications," Fan said.

Phase two of Memory Machine's development will include a software development kit to program modern applications that require "zero I/O persistence," Fan said.

The combination of Intel Optane with Memory Machine vastly increases the byte-addressable storage capacity of main memory, said Eric Burgener, a vice president of storage at IT analysis firm IDC.

"This is super interesting for AI, big data analytics, artificial intelligence and things like that, where you can load a large working set in main memory and run it much faster than [using] block-addressable NVMe flash storage," Burgener said.

"As long as you have a bunch of Optane cards and the MemVerge software layer running on the server, you can take any application and run it at memory speeds, without rewrites."

Memory as storage: Gaining traction?

The MemVerge release underscores a flurry of new activity surrounding the use of persistent memory for disaggregated compute and storage.

In April, startup Alluxio entered a strategic partnership with Intel to implement its cloud orchestration file software with Intel Optane cards in Intel Xeon Scalable-powered servers. The combination allows disaggregated cloud storage to use file system semantics efficiently, as well as tap into DRAM or SSD media as buffer or page caches, said Alluxio CEO Haoyuan Li.

Meanwhile, semiconductor maker Micron Technology -- which partnered with Intel to initially develop the 3D XPoint media used in Optane devices -- recently introduced an open source object storage engine geared for flash and persistent memory. Micron said Red Hat is among the partners helping to fine-tune Heterogeneous Memory Storage Engine for upstream inclusion in the Linux kernel.