
NVMe-oF storage technology and terms explained

Get to know how NVMe-oF optimizes storage network performance and lowers latency with this roundup of terminology associated with the emerging technology.

Storage has picked up a lot of speed in the last five years with the adoption of NVMe and persistent memory. Those changes have storage network technology scrambling to avoid becoming the bottleneck in a storage system.

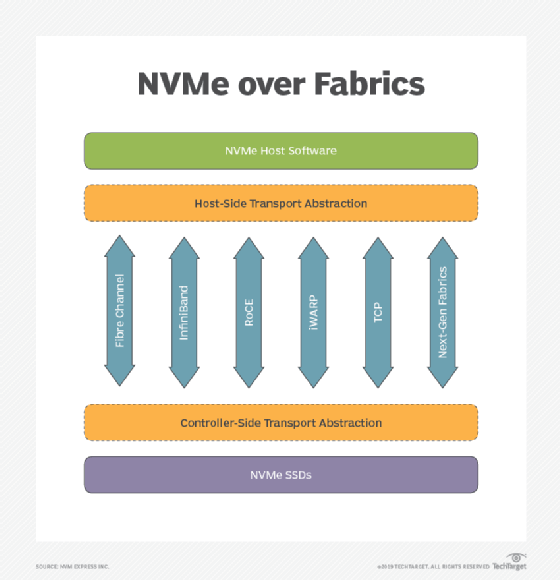

NVMe over Fabrics (NVMe-oF) helps prevent these slowdowns by extending the performance and low-latency benefits of NVMe across the network, giving multiple servers and CPUs access to shared NVMe storage. The original design goal for NVMe-oF was to add no more than 10 microseconds (µs) of latency compared with a local NVMe drive. NVMe-oF works by encapsulating NVMe commands and sending them across the network fabric to remote storage drives.
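To make that latency goal concrete, the following Python sketch compares a read measured against a local NVMe drive with the same read over the fabric and checks whether the difference stays within 10 µs. The function names and sample latencies are hypothetical; real numbers would come from a benchmarking tool run against your own hardware.

```python
# Sketch: check measured latencies against the NVMe-oF design goal of adding
# no more than ~10 microseconds over a local NVMe drive.
# Function names and sample values are hypothetical, not benchmark results.

FABRIC_BUDGET_US = 10.0  # design goal cited for NVMe-oF


def added_fabric_latency_us(local_us: float, remote_us: float) -> float:
    """Return the extra latency (in µs) the fabric adds on top of local access."""
    return remote_us - local_us


def within_budget(local_us: float, remote_us: float,
                  budget_us: float = FABRIC_BUDGET_US) -> bool:
    """True if the remote path stays within the fabric latency budget."""
    return added_fabric_latency_us(local_us, remote_us) <= budget_us


if __name__ == "__main__":
    # Hypothetical read latencies in microseconds.
    local, remote = 80.0, 88.5
    print(added_fabric_latency_us(local, remote), within_budget(local, remote))  # 8.5 True
```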

NVMe-oF in the enterprise is expected to take off in the next couple of years. However, implementing the technology can be complicated. Achieving the promised performance improvements depends on getting a lot of details right, including the choice of network technology and fabric, the SSDs and the configuration of every component. What follows are some of the basic terms and technologies you'll need to know for a successful NVMe-oF transition.

DSSD

DSSD is an EMC technology that predated NVMe-oF. It shared a pool of flash across the hosts in a rack and was a less expensive approach to low-latency shared storage than competing technologies of its time. In 2017, with NVMe-oF matching or beating DSSD's 100 µs latency at about a fifth of the cost, Dell EMC shut down its DSSD development work.

Highly parallel, many-to-many architecture

Because SAS and SATA drives have limited queue depth, I/O gets stacked into a single queue that can become a bottleneck and cannot take advantage of the parallelism of the NAND flash media used in SSDs. NVMe, and therefore NVMe-oF, changed that by providing up to 65,535 queues, each holding as many as 65,535 commands. By comparison, SATA and SAS each have a single queue, holding 32 and 256 commands, respectively. This queue depth makes it possible to design a highly parallel, many-to-many architecture between hosts and drives, with a separate queue for each host and drive.
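The queue arithmetic is easy to check. This Python sketch uses the queue counts and depths quoted above to compute how many commands each interface can have in flight, and maps host CPU cores to queues. The per-core mapping is an illustrative assumption about how a host might use the queues, not a description of any specific driver.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QueueModel:
    """Command-queue limits of a storage interface (figures from the text)."""
    name: str
    queues: int
    commands_per_queue: int

    @property
    def max_outstanding(self) -> int:
        # Upper bound on commands that can be in flight at once.
        return self.queues * self.commands_per_queue


SATA = QueueModel("SATA", queues=1, commands_per_queue=32)
SAS = QueueModel("SAS", queues=1, commands_per_queue=256)
NVME = QueueModel("NVMe", queues=65_535, commands_per_queue=65_535)


def assign_queue(cpu_core: int, model: QueueModel) -> int:
    """Map a host CPU core to a queue (wrapping if cores outnumber queues).

    With a single queue, every core contends for queue 0; with NVMe, each
    core can get a private queue, so cores need not share one.
    """
    return cpu_core % model.queues


if __name__ == "__main__":
    for m in (SATA, SAS, NVME):
        print(f"{m.name}: {m.max_outstanding:,} commands in flight")
    print([assign_queue(c, SATA) for c in range(4)])  # [0, 0, 0, 0]
    print([assign_queue(c, NVME) for c in range(4)])  # [0, 1, 2, 3]
```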

NVMe storage

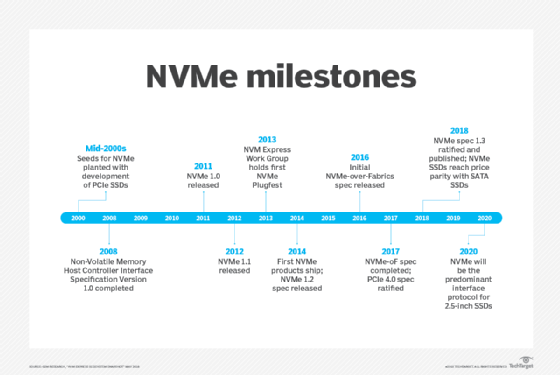

Non-Volatile Memory Express (NVMe) is an open standard designed as an alternative to the SCSI protocol, avoiding the latency-inducing bottlenecks that result from using SCSI-based technology with flash storage. NVMe is a logical device interface specification that uses controllers and drivers developed specifically for SSDs. NVMe devices connect to hosts via a PCI Express (PCIe) bus. Because PCIe connects directly to the CPU, it gives the processor memory-like access to storage, reducing I/O overhead and letting devices take full advantage of the speed, performance and parallelism of SSDs. A driver developed specifically for NVMe devices also lets them exploit the faster access speed of the PCIe interface.
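Every NVMe command, whether it travels over PCIe or is later encapsulated by NVMe-oF, is a fixed-size 64-byte submission queue entry (SQE). The following Python sketch packs an SQE for a Read command based on our reading of the NVMe base specification layout. It is illustrative only: the function name is hypothetical, the PRP data-pointer values are placeholders, and in practice the operating system's NVMe driver builds these entries in queue memory shared with the controller.

```python
import struct

NVME_CMD_READ = 0x02  # Read opcode in the NVM command set


def build_read_sqe(command_id: int, nsid: int, slba: int, num_blocks: int,
                   prp1: int = 0, prp2: int = 0) -> bytes:
    """Pack a 64-byte NVMe Read submission queue entry (little-endian).

    Layout assumed here: CDW0 (opcode + command ID), NSID, reserved
    CDW2-3, metadata pointer, data pointer (PRP1, PRP2), then CDW10-15.
    """
    if not 0 <= slba < 2**64:
        raise ValueError("starting LBA must fit in 64 bits")
    if not 1 <= num_blocks <= 0x10000:
        raise ValueError("num_blocks must be between 1 and 65536")
    # Opcode in bits 7:0, command identifier in bits 31:16; FUSE/PSDT stay 0.
    cdw0 = NVME_CMD_READ | ((command_id & 0xFFFF) << 16)
    cdw10 = slba & 0xFFFFFFFF          # starting LBA, low 32 bits
    cdw11 = slba >> 32                 # starting LBA, high 32 bits
    cdw12 = (num_blocks - 1) & 0xFFFF  # number of logical blocks, 0-based
    sqe = struct.pack("<IIQQQQIIIIII",
                      cdw0, nsid, 0, 0, prp1, prp2,
                      cdw10, cdw11, cdw12, 0, 0, 0)
    assert len(sqe) == 64  # every NVMe submission queue entry is 64 bytes
    return sqe


if __name__ == "__main__":
    entry = build_read_sqe(command_id=7, nsid=1, slba=2048, num_blocks=8)
    print(len(entry), entry[0])  # 64 2
```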

NVM Express Inc.

NVM Express Inc. was formed in 2014 as the nonprofit consortium responsible for developing the NVMe specification. The group has more than 100 members and focuses on defining, managing and promoting NVMe as an industrywide standard for high-bandwidth, low-latency access to SSDs. The latest version of the NVMe specification, version 1.4, was released July 23, 2019. The group also develops the NVMe-oF specification, which carries NVMe commands over a networked fabric, and the NVMe Management Interface, which facilitates the management of NVMe SSDs in servers and storage systems. The latest version of the NVMe-oF spec, version 1.1, was released Oct. 22, 2019.

NVMe integration testing

Figuring out whether NVMe-oF products are compatible with one another can be a challenge. Fortunately, the University of New Hampshire InterOperability Laboratory (UNH-IOL) serves as a clearinghouse of information on network devices and the network standards they support. After more than two years of testing, UNH-IOL has compiled a list of standards-compliant products that covers NVMe host platforms, drives, switches and management interfaces, along with NVMe-oF storage hardware and software targets, initiators and switches.

NVMe over FC

The early iterations of the NVMe-oF specification supported Fibre Channel (FC) and remote direct memory access (RDMA). With both transport types, data moves between the host and the array using a zero-copy process (or, in the case of NVMe over FC, a nearly zero-copy process), meaning data isn't copied multiple times between memory buffers and I/O cards. This approach reduces the CPU load and latency of data transfers and improves performance. FC's buffer-to-buffer credit flow control ensures reliable, lossless transport: a sender doesn't transmit data until the receiver signals that a buffer is available. FC minimizes latency and host CPU use by having host bus adapters (HBAs) offload the FC Protocol (FCP). In general, 16 Gbps and 32 Gbps FC HBAs and switches support NVMe over FC while still providing SCSI over FCP services.
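FC's buffer-to-buffer credit scheme can be modeled in a few lines. In this Python sketch, the sender starts with a number of credits equal to the receiver's free buffers, spends one per frame and gets one back each time the receiver frees a buffer (the R_RDY signal in FC). The class and method names are hypothetical, and the model ignores timing, frame sizes and credit recovery; it only shows why a credit-based link holds frames rather than dropping them.

```python
from collections import deque


class CreditLink:
    """Toy model of Fibre Channel buffer-to-buffer credit flow control."""

    def __init__(self, bb_credit: int) -> None:
        self.credits = bb_credit          # receiver buffers the sender may fill
        self.receiver_buffers = deque()   # frames occupying receiver buffers
        self.waiting = deque()            # frames held back for lack of credit

    def send(self, frame: str) -> bool:
        """Transmit if a credit is available; otherwise hold the frame."""
        if self.credits == 0:
            self.waiting.append(frame)    # wait instead of dropping the frame
            return False
        self.credits -= 1
        self.receiver_buffers.append(frame)
        return True

    def receiver_frees_buffer(self) -> str:
        """Receiver processes a frame and returns a credit (R_RDY)."""
        frame = self.receiver_buffers.popleft()
        self.credits += 1
        if self.waiting:                  # a returned credit releases a held frame
            self.send(self.waiting.popleft())
        return frame


if __name__ == "__main__":
    link = CreditLink(bb_credit=2)
    print([link.send(f) for f in ("f1", "f2", "f3")])  # [True, True, False]
    print(link.receiver_frees_buffer(), list(link.receiver_buffers))  # f1 ['f2', 'f3']
```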

NVMe over RDMA

The NVMe-oF spec's support for RDMA fabrics covers InfiniBand, RDMA over Converged Ethernet (RoCE) and the Internet Wide Area RDMA Protocol (iWARP). RDMA enables networked computers to exchange data in main memory without involving the processor, cache or OS of either computer. This frees up resources, improving throughput and performance. Implementing NVMe over RDMA can be complex. It can require special configurations, particularly for RoCE, such as enabling priority flow control (PFC) and explicit congestion notification (ECN) to prevent dropped packets. It also can require specialized equipment, such as RDMA-enabled network interface cards.
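ECN lets a congested switch mark packets instead of dropping them, and the sender slows down when the marks are reported back. Many RoCE deployments configure switches with a RED-style marking curve like the one in this Python sketch, similar to the switch behavior described for the DCQCN congestion control scheme. The function name, thresholds and maximum probability are hypothetical tuning values, and PFC, which pauses traffic per priority class, is a separate mechanism not modeled here.

```python
def ecn_mark_probability(queue_kb: float, k_min: float, k_max: float,
                         p_max: float) -> float:
    """Probability that a switch ECN-marks a packet at a given queue depth.

    RED-style curve: no marking at or below k_min, a linear ramp up to
    p_max between k_min and k_max, and marking every packet above k_max.
    """
    if not 0 <= k_min < k_max or not 0 <= p_max <= 1:
        raise ValueError("need 0 <= k_min < k_max and 0 <= p_max <= 1")
    if queue_kb <= k_min:
        return 0.0
    if queue_kb > k_max:
        return 1.0
    return p_max * (queue_kb - k_min) / (k_max - k_min)


if __name__ == "__main__":
    # Hypothetical thresholds: start marking at 100 KB, ramp to 10% at 400 KB.
    for depth in (50, 250, 400, 500):
        print(depth, ecn_mark_probability(depth, 100, 400, 0.1))
```

Marking early and probabilistically gives senders a chance to back off before queues overflow, which is what keeps a RoCE fabric from falling back on drops or on frequent PFC pauses.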

NVMe-oF performance monitoring

In the past, a poorly configured network switch, port or adapter could easily be ignored or go undetected in a storage system, because the latency it added paled in comparison to that from storage applications and devices. This changed with the advent of NVMe-oF. Now, networks are struggling to keep up with the storage hardware and software. This reversal has made monitoring NVMe-oF performance a critical part of storage management to ensure the network configuration delivers maximum performance. Storage networks must be checked proactively to ensure network design and resource use can keep up with I/O requirements. NVMe-oF performance monitoring relies on real-time telemetry data capture for network visibility, along with tools to identify and fix problems before they affect applications.
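As a simple example of turning telemetry into an alert, this Python sketch computes the 99th-percentile latency for each switch port from collected samples and flags the ports that exceed a limit. The port names, sample values and 30 µs threshold are hypothetical; a real deployment would pull samples from its switches' or adapters' telemetry feeds.

```python
import math
from typing import Dict, List


def percentile(samples: List[float], pct: float) -> float:
    """Nearest-rank percentile; pct must be in (0, 100]."""
    if not samples or not 0 < pct <= 100:
        raise ValueError("need samples and 0 < pct <= 100")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def flag_slow_ports(telemetry: Dict[str, List[float]],
                    p99_limit_us: float) -> List[str]:
    """Return the ports whose p99 latency exceeds the limit, sorted by name."""
    return sorted(port for port, samples in telemetry.items()
                  if percentile(samples, 99) > p99_limit_us)


if __name__ == "__main__":
    # Hypothetical per-port latency samples in microseconds.
    telemetry = {
        "switch1/port3": [12.0] * 99 + [40.0],
        "switch1/port7": [11.0] * 98 + [60.0, 75.0],
    }
    print(flag_slow_ports(telemetry, p99_limit_us=30.0))  # ['switch1/port7']
```

Tail percentiles are used here instead of averages because a port that is usually fast but occasionally stalls would barely move an average, yet it is exactly the kind of problem that hurts latency-sensitive NVMe-oF traffic.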

NVMe/TCP

The 1.1 revision of the NVMe-oF spec added a transport binding for the Transmission Control Protocol (TCP), the protocol that drives the internet and one of the most common transports worldwide. NVMe/TCP enables the use of NVMe-oF across a standard Ethernet network without requiring configuration changes or special equipment. It can also be used across the internet. NVMe/TCP defines how communication between NVMe-oF hosts and controllers is mapped onto TCP across an IP network. A messaging and queuing model specifies the communication sequence.
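To illustrate the messaging model, this Python sketch wraps a 64-byte NVMe submission queue entry in an NVMe/TCP command capsule PDU. It follows our reading of the NVMe/TCP transport binding: an 8-byte common header (PDU type, flags, header length, data offset and total PDU length) followed by the SQE and any in-capsule data. It omits the optional header and data digests and the ICReq/ICResp exchange that initializes each connection, and it treats the SQE as opaque bytes, so it is a sketch, not a working initiator. The function name is hypothetical.

```python
import struct

PDU_TYPE_CAPSULE_CMD = 0x04           # CapsuleCmd PDU type in NVMe/TCP
COMMON_HDR = struct.Struct("<BBBBI")  # pdu-type, flags, hlen, pdo, plen


def build_capsule_cmd_pdu(sqe: bytes, in_capsule_data: bytes = b"") -> bytes:
    """Wrap a 64-byte NVMe SQE in an NVMe/TCP CapsuleCmd PDU.

    Simplifications: no header/data digests and no padding, so any
    in-capsule data starts right after the header (PDO equals HLEN).
    """
    if len(sqe) != 64:
        raise ValueError("an NVMe submission queue entry is 64 bytes")
    hlen = COMMON_HDR.size + len(sqe)            # 8-byte header + SQE = 72
    pdo = hlen if in_capsule_data else 0         # data offset, 0 if no data
    plen = hlen + len(in_capsule_data)           # total PDU length
    header = COMMON_HDR.pack(PDU_TYPE_CAPSULE_CMD, 0, hlen, pdo, plen)
    return header + sqe + in_capsule_data


if __name__ == "__main__":
    pdu = build_capsule_cmd_pdu(bytes(64))  # all-zero placeholder SQE
    print(len(pdu), pdu[:8].hex())  # 72 0400480048000000
```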

Scale-out storage architecture

Scaling out expands storage capacity by adding connected arrays, servers or nodes. Until NVMe-oF arrived, scale-out storage was complex to implement. NVMe-oF provides a direct path between a host and storage media, eliminating the need to route data through a centralized controller, a latency-inducing step that keeps SSDs from delivering their full capabilities. The direct path lets hosts interact with many drives, reducing latency and increasing scale-out capacity.
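NVMe-oF itself doesn't prescribe a data layout, but one common way for a host to spread I/O across many directly reachable drives is round-robin striping. This Python sketch, with a hypothetical function name, drive names and stripe size, maps a logical block address to the drive and offset that hold it, so the host can send each request straight to the right drive instead of through a central controller.

```python
from typing import List, Tuple


def locate_block(lba: int, drives: List[str],
                 stripe_blocks: int) -> Tuple[str, int]:
    """Map a logical block to (drive, block offset on that drive).

    Consecutive stripes of `stripe_blocks` blocks rotate round-robin
    across the drives, so load spreads evenly as drives are added.
    """
    if lba < 0 or stripe_blocks <= 0 or not drives:
        raise ValueError("invalid layout")
    stripe, within = divmod(lba, stripe_blocks)
    drive_index = stripe % len(drives)
    row = stripe // len(drives)  # full rotations before this stripe
    return drives[drive_index], row * stripe_blocks + within


if __name__ == "__main__":
    drives = ["nvme-a", "nvme-b", "nvme-c"]  # hypothetical remote drives
    print(locate_block(25, drives, stripe_blocks=8))  # ('nvme-a', 9)
```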

Transport binding specification

NVMe-oF is not tied to any one transport protocol, because the NVMe-oF protocol is separated from the transport layer it runs on. A transport binding specification defines how NVMe-oF operates over a particular transport. Binding specs have been developed for RDMA (including InfiniBand, RoCE and iWARP), Fibre Channel and, most recently, TCP. NVMe-oF can run on any network transport that has a binding spec.
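On Linux, the nvme-cli tool shows this transport independence in practice: the same `nvme connect` command is used across fabrics, with the transport selected by the `-t` option. This Python sketch only builds the argument list for the RDMA and TCP transports. The function name, target address and subsystem NQN are hypothetical, 4420 is the port commonly used for NVMe-oF, and Fibre Channel, which uses a different address format, is left out.

```python
from typing import List

NVMEOF_DEFAULT_PORT = "4420"  # port commonly used for NVMe-oF over RDMA and TCP


def nvme_connect_argv(transport: str, traddr: str, subsys_nqn: str,
                      trsvcid: str = NVMEOF_DEFAULT_PORT) -> List[str]:
    """Build an `nvme connect` command line for an IP-based transport.

    Only the transport argument changes between RDMA and TCP; the NVMe
    commands carried over the resulting connection are the same.
    """
    if transport not in ("rdma", "tcp"):
        raise ValueError("this sketch only covers the rdma and tcp transports")
    return ["nvme", "connect", "-t", transport, "-a", traddr,
            "-s", trsvcid, "-n", subsys_nqn]


if __name__ == "__main__":
    argv = nvme_connect_argv("tcp", "192.0.2.10",
                             "nqn.2019-10.com.example:subsystem1")
    print(" ".join(argv))
```

On a host with nvme-cli installed, root privileges and a reachable target, the list could be passed to `subprocess.run(argv, check=True)`.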