NVMe speeds vs. SATA and SAS: Which is fastest?

The NVMe protocol is tailor-made to make SSDs fast. Get up to speed on NVMe performance and how it compares to the SATA and SAS interfaces.

The NVMe protocol has become the industry standard for supporting solid-state drives and other non-volatile memory subsystems. NVMe speeds are substantially better than those of traditional storage protocols, such as SAS and SATA.

The non-volatile memory express standard is based on the NVM Express Base Specification published by NVM Express Inc., a nonprofit consortium of tech industry leaders. The consortium maintains a set of specifications that define how host software communicates with non-volatile memory across supported transports. As of NVMe 2.0, the set includes the following specifications:

- NVMe Base Specification

- NVMe Command Set specifications (NVM Command Set, Zoned Namespaces Command Set and Key Value Command Set)

- NVMe Transport specifications (NVMe over PCIe Transport, RDMA Transport and TCP Transport)

- NVMe Management Interface specification

The NVM Express Base Specification defines both a storage protocol and a host controller interface optimized for client and enterprise systems that use SSDs based on PCIe. PCIe is a serial expansion bus standard that enables computers to attach to peripheral devices.

A PCIe bus can deliver lower latencies and higher transfer speeds than older bus technologies, such as the PCI or PCI Extended (PCI-X) standards. With PCIe, each device has its own dedicated point-to-point connection, so devices don't have to compete for bandwidth on a shared bus.

Expansion slots that adhere to the PCIe standard can scale from one to 32 data transmission lanes. The standard defines seven physical lane configurations: x1, x2, x4, x8, x12, x16 and x32. The configurations are based on the number of lanes; for example, an x8 configuration uses eight lanes. The more lanes there are, the better the performance -- and the higher the costs.

The PCIe version is another factor that affects performance. In general, each version doubles the bandwidth and transfer rate of the previous version, so the more recent the version, the better the performance. For instance, PCIe 3.0 delivers a bandwidth of 1 GBps per lane; PCIe 4.0 doubles that bandwidth to 2 GBps; and PCIe 5.0 doubles the bandwidth again to 4 GBps.
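The arithmetic behind these figures is simple enough to sketch in a few lines of Python. The snippet below is an illustration only: it uses the approximate per-lane rates quoted above, and the function and constant names are hypothetical, not part of any PCIe tooling.

```python
# Approximate bandwidth per lane in GBps, as quoted in this article.
PER_LANE_GBPS = {"3.0": 1.0, "4.0": 2.0, "5.0": 4.0}

# Lane configurations defined by the PCIe standard (x1 through x32).
VALID_LANE_COUNTS = (1, 2, 4, 8, 12, 16, 32)


def pcie_bandwidth_gbps(version: str, lanes: int) -> float:
    """Return the approximate bandwidth of a PCIe link in GBps."""
    if version not in PER_LANE_GBPS:
        raise ValueError(f"unsupported PCIe version: {version}")
    if lanes not in VALID_LANE_COUNTS:
        raise ValueError(f"invalid lane count: {lanes}")
    # Total bandwidth scales linearly with the number of lanes.
    return PER_LANE_GBPS[version] * lanes


if __name__ == "__main__":
    for version in PER_LANE_GBPS:
        for lanes in (4, 16):
            bandwidth = pcie_bandwidth_gbps(version, lanes)
            print(f"PCIe {version} x{lanes}: ~{bandwidth:.0f} GBps")
```

A PCIe 4.0 x16 link works out to roughly 32 GBps under these assumptions, while a four-lane PCIe 4.0 link tops out at about 8 GBps.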

What is an SSD?

A solid-state drive is a type of non-volatile storage device for persisting electronic data. Unlike a hard disk drive or magnetic tape, the SSD contains no moving parts that can break or fail. Instead, an SSD uses silicon microchips to store data, so it requires less power and produces less heat.

Most of today's SSDs are based on flash memory technology. Each device includes a flash controller and one or more NAND flash memory chips.

NVMe speeds and performance

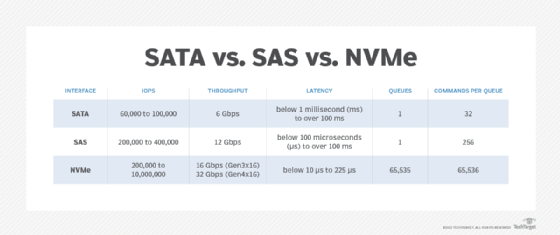

NVMe was developed from the ground up specifically for SSDs to improve throughput and IOPS and to reduce latency. NVMe-based drives can theoretically attain throughputs up to 32 GBps, assuming the drives are based on PCIe 4.0 and use 16 PCIe lanes. Today's PCIe 4.0 SSDs tend to be four-lane devices with throughputs closer to 7 GBps. Although some drives reach only 200,000 IOPS, many hit well over 500,000 IOPS, with some as high as 10 million. At the same time, latencies continue to drop; many drives achieve latencies below 20 microseconds (µs) and some below 10 µs.

That said, metrics that measure NVMe SSD speeds, such as throughput or transfer rate, can vary widely. These figures are trends, rather than absolutes, considering the technology's dynamic nature. Factors such as workload type -- write vs. read or random vs. sequential -- can make a significant difference in the maximum NVMe speeds. Even so, it's clear that NVMe significantly outperforms protocols such as SAS and SATA on every front, especially when used with PCIe 4.0.
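One reason workload type matters is that throughput and IOPS are linked by the size of each I/O: throughput is roughly IOPS multiplied by block size. The Python sketch below shows the relationship. The block sizes (4 KiB for small random I/O, 128 KiB for large sequential I/O) are illustrative assumptions, not figures from any drive's data sheet, and the function names are hypothetical.

```python
def throughput_gbps(iops: float, block_size_bytes: int) -> float:
    """Throughput in GBps (10**9 bytes per second) for a given IOPS and I/O size."""
    return iops * block_size_bytes / 1e9


def iops_needed(target_gbps: float, block_size_bytes: int) -> float:
    """IOPS required to sustain a target throughput at a given I/O size."""
    return target_gbps * 1e9 / block_size_bytes


if __name__ == "__main__":
    small_io = 4 * 1024      # assumed size of a small random I/O
    large_io = 128 * 1024    # assumed size of a large sequential I/O

    # 500,000 small I/Os per second move only about 2 GBps.
    print(f"{throughput_gbps(500_000, small_io):.3f} GBps")

    # Sustaining 7 GBps takes far fewer operations when each one is large.
    print(f"{iops_needed(7, large_io):,.0f} IOPS")
```

Under these assumptions, a drive can post a very high IOPS number on small random reads and still sit well below its headline sequential throughput, which is why the two metrics are usually quoted separately.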

NVMe uses a more streamlined command set to process I/O requests, one that requires fewer than half the CPU instructions that SATA or SAS needs. NVMe also has a more extensive and efficient system for queuing messages. For example, SATA and SAS each support only one I/O queue at a time. The SATA queue can contain up to 32 outstanding commands, and the SAS queue can contain up to 256. NVMe can support up to 65,535 queues and up to 64K (65,536) commands per queue.
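To put the queue limits side by side, the following Python sketch multiplies queue count by queue depth for each protocol. It is a back-of-the-envelope comparison of theoretical maximums only; the `QueueModel` class is a hypothetical helper, and no real system would configure NVMe anywhere near its upper bound.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QueueModel:
    """Theoretical queuing limits of a storage protocol."""
    name: str
    queues: int
    commands_per_queue: int

    @property
    def max_outstanding(self) -> int:
        # Upper bound on commands that can be in flight at once.
        return self.queues * self.commands_per_queue


PROTOCOLS = [
    QueueModel("SATA", queues=1, commands_per_queue=32),
    QueueModel("SAS", queues=1, commands_per_queue=256),
    # 64K entries per queue (often rounded to 64,000).
    QueueModel("NVMe", queues=65_535, commands_per_queue=65_536),
]

if __name__ == "__main__":
    for protocol in PROTOCOLS:
        print(f"{protocol.name:<5} {protocol.max_outstanding:>15,} outstanding commands")
```

The gap is several orders of magnitude, which is the headroom that lets a host spread I/O across many CPU cores without funneling everything through a single queue.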

This queuing mechanism lets NVMe make better use of the parallel processing capabilities of an SSD, something the other protocols cannot do. In addition, NVMe uses direct memory access over the PCIe bus, with I/O commands and responses mapped directly to shared memory on the host. This reduces CPU overhead even further and improves NVMe speeds. As a result, the host can sustain more IOPS per CPU cycle, and the host software stack adds less latency.
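Why queue depth matters for parallelism can be shown with Little's Law, which relates the number of commands in flight to throughput and latency. The Python sketch below computes a rough IOPS ceiling for a given number of outstanding commands. It assumes every command takes the same latency and that the device can service all outstanding commands in parallel, so treat the results as upper bounds rather than predictions. The latency values are illustrative, and the function name is hypothetical.

```python
def iops_ceiling(outstanding_commands: int, latency_us: float) -> float:
    """Upper bound on IOPS from Little's Law: throughput = concurrency / latency."""
    if latency_us <= 0:
        raise ValueError("latency must be positive")
    # Latency is given in microseconds, so scale by one million to get per-second.
    return outstanding_commands * 1_000_000 / latency_us


if __name__ == "__main__":
    # A single queue of 32 commands at an assumed 100 µs per command.
    print(f"Depth 32 at 100 µs:   {iops_ceiling(32, 100):,.0f} IOPS")

    # Sixteen queues of 64 commands each at an assumed 20 µs per command.
    print(f"Depth 1,024 at 20 µs: {iops_ceiling(16 * 64, 20):,.0f} IOPS")
```

In this simplified model, a protocol capped at a few dozen outstanding commands cannot keep a highly parallel SSD busy, no matter how fast the flash is.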

SAS and SATA speeds and performance

SAS and SATA are common protocols to facilitate connectivity between host software and peripheral drives. The SATA protocol is based on the Advanced Technology Attachment standard, and the SAS protocol is based on the SCSI standard.

The SATA and SAS protocols were developed specifically for HDD devices. Although SAS is generally considered to be faster and more reliable, both protocols can easily handle HDD workloads. If a system runs into storage-related roadblocks, it is often because of the drive itself or other factors, not because of the protocol.

SSDs have changed this equation. Their higher IOPS can quickly overwhelm the older protocols, which causes them to reach their limits before they can take full advantage of the drive's performance capabilities.

The older protocols don't perform nearly as well on SSDs. SATA and SAS each support only one I/O queue at a time, and those queues can hold only a small number of outstanding commands compared with NVMe.

In addition, today's SATA-based drives can attain throughputs of only 6 Gbps, with IOPS topping out at about 100,000. Note that these interface rates are in gigabits per second, not the gigabytes per second used for the NVMe figures. Latencies typically exceed 100 µs, although some newer SATA-based SSDs can achieve much lower latencies. SAS drives deliver somewhat better performance; they provide throughputs up to 12 Gbps and IOPS averaging between 200,000 and 400,000. Even so, lower IOPS are not unusual. In some cases, SAS latencies have fallen below 100 µs, but not by much.
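Because SATA and SAS rates are quoted in gigabits per second, it helps to convert them to bytes before comparing them with NVMe throughput. The Python sketch below does that conversion. It assumes 8b/10b line encoding, in which every 8 bits of data travel as 10 bits on the wire; that detail comes from the interface standards rather than from this article, and the function name is hypothetical.

```python
def usable_mbps(line_rate_gbps: float, payload_bits: int = 8, encoded_bits: int = 10) -> float:
    """Convert a serial line rate in Gbps to usable MBps after line encoding."""
    # Strip the encoding overhead to get payload bits per second.
    usable_bits_per_second = line_rate_gbps * 1e9 * payload_bits / encoded_bits
    # Eight bits per byte, one million bytes per MB.
    return usable_bits_per_second / 8 / 1e6


if __name__ == "__main__":
    print(f"SATA 6 Gbps: ~{usable_mbps(6):.0f} MBps")
    print(f"SAS 12 Gbps: ~{usable_mbps(12):.0f} MBps")
```

Under that assumption, a 6 Gbps SATA link carries about 600 MBps and a 12 Gbps SAS link about 1,200 MBps, both well short of the multi-gigabyte rates of a four-lane PCIe 4.0 NVMe drive.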

NVMe 2.0

The NVMe 2.0 specifications define a number of new and enhanced features to support the emerging NVMe device environment but maintain compatibility with previous versions. The 2.0 specifications include feature and management updates that make it possible to use NVMe with rotational media such as HDDs.

NVMe 2.0, released in 2021, added two command set specifications. The Zoned Namespaces Command Set defines a storage device interface that enables a host and SSD to collaborate on data placement. This helps align the data with the SSD's physical media, which improves performance and resource use. The Key Value Command Set enables applications to use key-value pairs to communicate directly with an SSD, which avoids the overhead of translation tables between keys and logical blocks.

NVMe 2.0 added several other important features and updated many existing ones. For example:

- Namespace Types enables an SSD controller to support different NVMe command sets.

- NVMe Endurance Group Management improves SSD control by enabling media to be configured into endurance groups and NVM sets, which exposes granularity of access to the SSD.

- Multiple Controller Firmware Update defines the behavior for updating firmware on a domain that contains multiple controllers.

- Command Group Control protects a system against unintentional or malicious changes after it's been provisioned.

- Command and Feature Lockdown enables host and management controls to prevent the execution of certain commands.

- A simple copy command lets a host copy data from multiple logical block ranges to a single consecutive logical block range.
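The semantics of the simple copy command can be illustrated with a toy model. In the Python sketch below, a namespace is represented as a plain list of blocks, and the hypothetical `simple_copy` function gathers several source ranges into one consecutive destination range. This models only the behavior described above; it is not the NVMe command format or any driver API.

```python
from typing import List, Tuple


def simple_copy(
    blocks: List[bytes],
    source_ranges: List[Tuple[int, int]],
    dest_start: int,
) -> None:
    """Copy each (start_lba, length) source range, in order, into a single
    consecutive destination range that begins at dest_start."""
    gathered: List[bytes] = []
    for start, length in source_ranges:
        if start < 0 or length < 0 or start + length > len(blocks):
            raise ValueError(f"source range out of bounds: ({start}, {length})")
        # Read all source data first so the write cannot disturb later reads.
        gathered.extend(blocks[start:start + length])

    if dest_start < 0 or dest_start + len(gathered) > len(blocks):
        raise ValueError("destination range out of bounds")
    blocks[dest_start:dest_start + len(gathered)] = gathered


if __name__ == "__main__":
    # Eight populated blocks followed by five empty ones.
    namespace = [bytes([i]) for i in range(8)] + [b"\x00"] * 5
    simple_copy(namespace, [(0, 2), (5, 3)], dest_start=8)
    print(namespace[8:13])
```

The point of the command is that the drive performs the gather internally, so the data never has to cross the bus to the host and back.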

The NVMe 2.0 specifications also enhance telemetry, include capabilities that enable large-scale environments with different domains, define a new identity structure that can span multiple namespaces and update the Command Effects Log.

NVMe 1.3 and NVMe 1.4

In 2019, the NVM Express group released the NVMe 1.4 specification. It built on and improved NVMe 1.3, which was released in 2017. NVMe 1.4 introduced several new and enhanced features. For example, the revised specification defined a PCIe persistent memory region where contents persist across power cycles. It defined a predictable latency mode that enables a well-behaved host to achieve a deterministic read latency.

NVMe 1.4 also made it possible to implement a persistent event log in NVMe subsystems, report asymmetric namespace access characteristics to the host and enable the host to associate an NVM set and I/O submission queue. The revision added a verification command to check the integrity of stored data and metadata. It defined performance and endurance hints that allow the controller to specify preferred granularities and alignments for write and deallocate operations.

NVMe 1.4 updated many existing features. It enhanced the host memory buffer and enabled write streams to be shared across multiple hosts. The specification also defined a controller mechanism for communicating namespace allocation granularities to the host and made it possible to prevent deallocation after a sanitize operation.

NVMe over fabrics

In June 2016, the NVM Express consortium published the NVMe over fabrics (NVMe-oF) specification, which extended NVMe's benefits across network fabrics such as Ethernet, InfiniBand and Fibre Channel. The consortium estimated that 90% of the NVMe-oF specification was the same as the NVMe specification. The primary difference between the two was the way the protocols handled commands and responses between the host and the NVM subsystem.

In October 2019, NVM Express released the NVMe-oF 1.1 specification, which added support for the TCP transport binding. NVMe over TCP made it possible to use NVMe-oF on standard Ethernet networks without making hardware or configuration changes. The consortium has no current plans for further development of NVMe-oF as a separate specification. The content has instead been rolled into version 2.0 of the NVM Express Base Specification.

Purchasing considerations

One of the biggest SSD purchasing considerations is whether to select drives based on the SATA, SAS or NVMe protocol. Most enterprise data centers favor NVMe over the other two because of its superior performance.

If decision-makers opt for NVMe, they should consider four important factors, specific to PCIe:

- PCIe version. Each new generation of the PCIe specification brings with it greater performance, so organizations should try to opt for SSDs that adhere to the most recent version.

- PCIe lane count. Most NVMe SSDs are limited to four PCIe lanes, but not all. The more lanes that a drive uses, the better the throughput.

- SSD form factor. PCIe SSDs come in four form factors: M.2, U.2, add-in cards and EDSFF. Decision-makers should consider factors such as budget, host location of the drives and amount of available space. EDSFF is an emerging technology that offers several benefits over other form factors in terms of performance, capacity and scalability.

- Storage environment. The hardware that houses the SSDs should support the same PCIe version as the drives to realize the greatest benefits. For example, if users run a PCIe 4.0 SSD on a PCIe 3.0 server, the drive will run at PCIe 3.0 speeds, not PCIe 4.0.
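The interaction between the PCIe version and lane count of a drive and those of its host can be sketched as a simple calculation: the link runs at the lower of the two versions and the narrower of the two widths. The Python snippet below is a rough planning aid built on that assumption and on the approximate per-lane rates cited earlier in this article. The function and constant names are hypothetical.

```python
# Approximate bandwidth per lane in GBps, keyed by PCIe generation.
PER_LANE_GBPS = {3: 1.0, 4: 2.0, 5: 4.0}


def effective_bandwidth_gbps(
    drive_gen: int, drive_lanes: int, host_gen: int, host_lanes: int
) -> float:
    """Estimate link bandwidth when a drive and host slot differ in capability."""
    # The link can only run at what both sides support.
    gen = min(drive_gen, host_gen)
    lanes = min(drive_lanes, host_lanes)
    if gen not in PER_LANE_GBPS:
        raise ValueError(f"unsupported PCIe generation: {gen}")
    return PER_LANE_GBPS[gen] * lanes


if __name__ == "__main__":
    # A PCIe 4.0 x4 SSD in a PCIe 3.0 server runs at PCIe 3.0 speeds.
    print(effective_bandwidth_gbps(drive_gen=4, drive_lanes=4, host_gen=3, host_lanes=4))

    # The same SSD in a PCIe 4.0 server gets the full link.
    print(effective_bandwidth_gbps(drive_gen=4, drive_lanes=4, host_gen=4, host_lanes=4))
```

In this model, the mismatched pairing halves the available bandwidth, from about 8 GBps to about 4 GBps, which is worth checking before paying a premium for newer-generation drives.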
