High-performance interconnects and storage performance

Should you use InfiniBand or RDMA over Converged Ethernet as an interconnect? We discuss each technology's effect on performance features such as latency, IOPS and throughput.

Storage sharing has been an important aspect of storage cost control and performance optimization for more than two decades. The goal has been to squeeze the best performance for the lowest cost out of the shared storage.

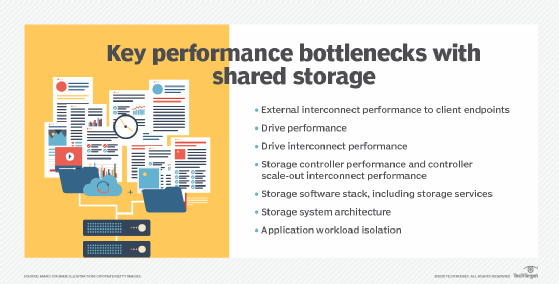

There are essentially four types of shared storage -- block, file, object and parallel file -- commonly found in high-performance computing (HPC) environments. And while each approach must solve multiple storage bottlenecks to achieve its optimal performance, this article focuses on issues related to external interconnect performance to client endpoints.

The role of high-performance interconnects with storage

The market is currently caught up in the hype of NVMe-oF as the key to high-performance interconnect issues. NVMe-oF is the NVMe block protocol that takes advantage of remote direct memory access (RDMA) in the different interconnect technologies. The primary purpose of NVMe-oF is to connect applications to a storage system as if it's a local NVMe flash or persistent memory drive. RDMA reduces latency by ensuring the receiving target has enough free memory for the data being transferred. Front latency is important for transactions.

An example of a system that takes advantage of RDMA between the application servers and target storage can be found within the Oracle engineered hyper-fast Exadata database appliance. It uses RDMA between the database servers and the storage servers within the turnkey appliance. Another example is from Excelero, running between the application servers and the target storage. In this case, Excelero bypasses the storage CPU and goes directly to the drives within the storage system. Each of these examples demonstrates the value of using RDMA, but not necessarily NVMe-oF. Pavilion Data uses NVMe-oF between application servers and its storage system. Each of these approaches provides exceptionally low latencies. NVMe-oF is supported on Ethernet, Fibre Channel (FC), InfiniBand and TCP/IP. Currently, the lowest latencies are on InfiniBand, Ethernet and FC. TCP/IP adds some latency.

Obviously, a 100 Gbps or 200 Gbps Ethernet network interface card will have better performance than a 10 Gbps NIC. Both InfiniBand and Ethernet can achieve up to 600 Gbps throughput (on 12 links) and they have future specifications that can get five times that throughput. But it's important to understand that a 200 Gbps NIC will saturate a PCIe gen 4 bus. Therefore, multiple 200 Gbps NICs or NIC ports won't double performance, nor will a 400 Gbps NIC.

FC -- which tops out at 32 Gbps and has future specs of 128 Gbps -- has fallen behind in the bandwidth high-performance game. There are few new FC customers, but the installed enterprise base is still projected to grow for several more years. Nevertheless, it's been relegated to a legacy interconnect.

Should your high-performance interconnects use InfiniBand or RoCE?

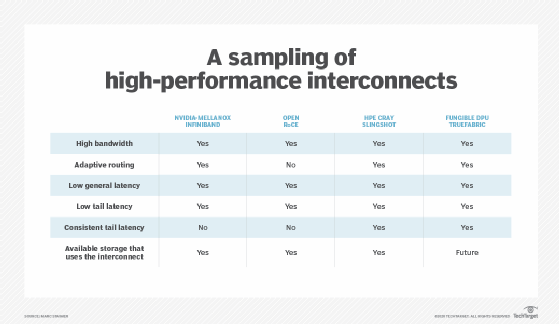

Going forward, most high-performance interconnect implementations are focusing on InfiniBand or RDMA over Converged Ethernet (RoCE). So how do the two protocols compare?

Architecture

InfiniBand architecture specifies how RDMA works in an InfiniBand network, while RoCE does the same for Ethernet. InfiniBand RDMA is in the adapter silicon. RoCE may be in adapter or NIC silicon, depending on the vendor. There are two official RoCE versions: RoCE v1 and RoCE v2. RoCE v1 encapsulates InfiniBand transport packets over Ethernet with Ethernet frame limitations of 1,500 bytes or 9,000 bytes for jumbo frames. RoCE v2 rides on top of the user datagram protocol (UDP).

RoCE originally used InfiniBand verbs -- functions and methods offered by an InfiniBand API. The latest implementations also take advantage of Libfabric, which is used by Amazon Web Services, Cisco Systems, Juniper Networks and Microsoft Azure.

Bandwidth

Both InfiniBand and Ethernet support bandwidth up to 400 Gbps. InfiniBand is an open standard, but it's currently only provided by Mellanox, which Nvidia recently acquired. Ethernet, an open standard from the Ethernet Technology Consortium, is supported by many vendors, including Arista Networks, Atto Technology, Broadcom, Chelsio Communications, Cisco Systems, Dell Technologies, Hewlett Packard Enterprise (HPE), Huawei, Intel, Juniper Networks, Marvell Technology Group's Cavium, Mellanox Technologies and Siemens. Mellanox is a key player in both InfiniBand and Ethernet high-performance interconnects, having recognized several years ago that it needed to avoid being limited to a potential niche.

Ethernet has always been a player in high-performance interconnect technology, but InfiniBand has done better because of its lower latencies and costs. However, Ethernet has started to erode those advantages. There are few storage systems with an external InfiniBand interface -- DataDirect Networks, HPE/Cray, IBM, Panasas, Pavilion Data Systems and WekaIO -- and fewer still that use InfiniBand as an internal interconnect.

Latency

InfiniBand has historically had the advantage over Ethernet with latency in both adapters and switching. This is because many aspects of InfiniBand networking were offloaded in the InfiniBand silicon and weren't in the Ethernet silicon. In fact, the offloading is called out in the InfiniBand specifications. Ethernet vendors have been closing that gap in recent years, especially in NICs where most of the latency occurs. Vendors such as Chelsio, and to a lesser extent Mellanox, have done much to narrow the latency gap.

InfiniBand has had an order of magnitude better latency than Ethernet switches. But that gap has narrowed significantly on Ethernet with the latest high-performance switches from vendors such as Cisco and Juniper Networks reducing that advantage by a factor of five to just about two times.

Based on bandwidth and latency, InfiniBand appears to have an advantage over RoCE. But there are other factors, such as congestion and routing, that affect high-performance interconnects.

Congestion

Congestion occurs in both layer 2 fabrics and layer 3 interconnect networks. In layer 2 fabrics -- such as FC, InfiniBand and RoCE -- the congestion is called a hot spot, which occurs when there's too much traffic over a specific route or port. A hot spot can negatively affect performance by blocking or slowing traffic. When experiencing congestion, layer 2 fabrics have been designed to not drop packets, while layer 3 networks will drop packets.

InfiniBand controls congestion using two different frame relay messages: forward explicit congestion notification (FECN) and backward explicit congestion notification (BECN). FECN notifies the receiving device when there's network congestion while BECN notifies the sending device. InfiniBand combines FECN and BECN with adaptive marking rate to reduce congestion. It offers coarse-grain congestion control because it penalizes everyone experiencing the congestion, not just the offending system.

Congestion control on RoCE uses explicit congestion notification (ECN), which is an extension of IP and TCP that enables endpoint network congestion notification without dropping packets. ECN puts a mark on the IP header to tell the sender there's congestion -- as if packets were dropped -- instead of dropping the packets. With non-ECN congestion communication, dropped packets require retransmission. ECN reduces packet drops by a congested TCP connection, avoiding retransmission. Fewer retransmissions reduces latency and jitter, providing better transactional and throughput performance. However, ECN also provides coarse-grain congestion control with no apparent advantages over InfiniBand.

Routing

When congestion or hot spots in the fabric or network occur, adaptive routing sends devices over alternate routes to relieve congestion and speed delivery. The latest Mellanox InfiniBand switches use adaptive routing notification and a technique Mellanox calls self-healing interconnect enhancement for intelligent data centers (Shield) or fast link fault recovery.

The InfiniBand adaptive routing enables the InfiniBand switch to select an output port based on the port's load, assuming there are no constraints on output port selection. Shield enables the switch to select an alternative output port should the output port in the linear forwarding table not be in an armed or active state and fails.

RoCE v2 runs on top of IP. IP has been routable for decades with advanced routing algorithms and now with AI machine learning that can predict congested routes and automatically send packets over faster ones. When it comes to routing, Ethernet and RoCE v2 have significant advantages.

However, neither InfiniBand nor RoCE do much for tail latency, which is the latency of the last packet instead of the first. Tail latency is very important to HPC messaging applications for synchronization, and some product innovations are starting to address that.

The future of high-performance interconnect technology

There are several vendors -- HPE Cray, Fungible and a stealth startup that can't be named at this time -- working on next-generation high-performance interconnect innovations. Let's explore some of the work they are doing to enhance Ethernet and RoCE.

HPE Cray

Part of HPE's 2019 acquisition of Cray included a high-performance interconnect based on standard Ethernet called Slingshot. HPE Cray developed Slingshot to provide better performance for its Shasta HPC supercomputer and ClusterStor E1000, its Lustre-based storage and connection to Shasta.

Slingshot uses standardized RDMA over Ethernet while adding a superset that HPE Cray labels HPC Ethernet. This superset is architected for the needs of HPC traffic and optimized for the small packet messaging prevalent in HPC applications and the message interface protocol. HPC Ethernet runs on top of UDP/IP and concurrently with all other Ethernet traffic. HPE Cray provides switches based on a unique application-specific integrated circuit (ASIC) that has highly advanced adaptive routing and congestion control. The combination enables high-performance networks that scale to more than 270,000 endpoints with no more than three hops between them. Slingshot supports all switch networking topologies, including Dragonfly.

The key to Slingshot is its exceptional performance on latency and especially tail latency under load. This was verified by Slingshot's results on the standardized Global Performance and Congestion Network Tests (GPCNeT). Slingshot showed no difference in average unloaded latency and average latency, with congestion at approximately 1.8 microseconds (µs). Tail latency congestion was more impressive, with 99% of the packets lower than 8.7 µs. Standard RoCE and InfiniBand on the same GPCNeT testing typically have on average 10 to 20 times more tail latency, and the latency is more inconsistent.

Slingshot congestion control and adaptive routing is switch based. This enables Slingshot to potentially work with a variety of 100/200 Gbps Ethernet NICs. Current implementations use Nvidia Mellanox ConnectX-5 NICs. The adaptive routing is fine-grained and penalizes only the parties causing the congestion, with no packets dropped. Unfortunately, as of 2020, Slingshot is only available and sold as part of the HPE Cray Shasta HPC supercomputer and ClusterStor storage when sold with or for a Shasta supercomputer.

Fungible

Fungible is a well-funded startup with more than 200 employees. The co-founders are Pradeep Sindhu, former founder of Juniper Networks, and Bertrand Serlet, former founder of Upthere (acquired by Western Digital) and previously senior vice president of software engineering at Apple.

Fungible has developed an ASIC architected to solve CPU problems with data-intensive processing, such as high-performance networking and storage. The processor is called a data processing unit (DPU). It isn't a networking offload as seen in NICs from Nvidia Mellanox and Chelsio, but rather is designed to facilitate and accelerate IP data packet processing and movement at extremely high speeds. It comes with endpoint-based congestion control and adaptive routing that works with any standard high-performance Ethernet switch. Like Slingshot, it minimizes hops and latency on an ongoing basis. Unlike Slingshot, the ASICs must live on the initiator and target NICs. Fungible has developed one ASIC for initiators and another with more throughput for storage targets. Network scalability is predicted to be in the hundreds of thousands of endpoints.

Fungible has also developed software, called TrueFabric, that runs on top of UDP/IP and goes with its DPUs. Fungible maintains that the DPU and its TrueFabric software are designed to pool resources at scale and reduce the resources required to meet extreme storage performance, including the number of switches and storage resources. The company also claimed that the ASIC and software will reduce latency and tail latency between nodes, as well as between nodes and storage, while delivering consistent predictive latencies and increased reliability and security. These claims are based on predictive algorithms. A word of caution, however. Until there are Fungible DPU products available with repeatable benchmark testing, these claims are intriguing but unverified. But based on the pedigree of the founders, it's worth exploring.

Unnamed stealth startup

There is one more startup in the high-performance interconnect market, but it's currently in stealth mode and unable to publicly discuss its plans. The company is developing an approach to high-performance interconnects with a completely switchless architecture that also scales into the tens of thousands to hundreds of thousands of endpoints, with congestion control and adaptive routing.

What makes this highly intriguing is the complete elimination of switches. Expect to see this available in the first half of 2021.