NVMe (non-volatile memory express)

What is NVMe (non-volatile memory express)?

NVMe (non-volatile memory express) is a storage access and transport protocol created to accelerate data transfer between host systems and solid-state storage devices. NVMe is used in both enterprise and client systems.

As solid-state technology became a preferred medium in the storage market, it quickly became clear that existing interfaces and protocols -- such as Serial ATA (SATA) and Serial-Attached SCSI (SAS) -- were no longer suitable in data center environments. In early 2011, the initial NVMe spec was released, with nearly 100 technology companies involved in its development.

NVMe is commonly used with solid-state storage that serves as primary storage, cache or backup. NVMe devices communicate with the system's central processing unit (CPU) through a high-speed Peripheral Component Interconnect Express (PCIe) bus. The NVMe protocol was designed for use with fast media. The main advantages of NVMe-based PCIe solid-state drives (SSDs) over other storage types are lower latency and higher input/output operations per second (IOPS).

NVMe has also been a key enabler of evolving technologies and applications such as the internet of things, artificial intelligence and machine learning, which can all benefit from the low-latency and high-performance improvements provided by NVMe-attached storage.


How does NVMe work?

The logic for NVMe is stored in and executed by an NVMe controller chip, which is physically located on the storage device itself. To transfer data, the NVMe protocol lets SSDs connect directly to the CPU through a PCIe bus.

The NVMe standard defines a register interface, command set and collection of features for PCIe-based SSDs with the goal of high performance and interoperability across a broad range of NVM systems.
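To make the idea of a command set more concrete, here is a minimal sketch that packs a single NVMe Read command into the fixed 64-byte submission queue entry format. The field offsets follow the common command layout as we understand it from the NVMe base specification (opcode and command identifier in Dword 0, namespace ID in Dword 1, data pointer in Dwords 6-9, starting LBA in Dwords 10-11, zero-based block count in Dword 12). The helper name `build_read_sqe` is hypothetical, and the data pointer is a placeholder rather than a real DMA address.

```python
import struct

NVME_CMD_READ = 0x02  # NVM command set opcode for Read


def build_read_sqe(cid: int, nsid: int, slba: int, num_blocks: int, prp1: int = 0) -> bytes:
    """Pack a simplified 64-byte NVMe Read submission queue entry (hypothetical helper).

    Only the fields needed for a basic read are filled in; all others stay zero.
    """
    if not 1 <= num_blocks <= 0x10000:
        raise ValueError("num_blocks must be between 1 and 65536")
    sqe = bytearray(64)
    # Dword 0: opcode (byte 0), flags (byte 1), command identifier (bytes 2-3)
    struct.pack_into("<BBH", sqe, 0, NVME_CMD_READ, 0, cid)
    # Dword 1: namespace identifier (bytes 4-7)
    struct.pack_into("<I", sqe, 4, nsid)
    # Dwords 6-7: first PRP entry of the data pointer (bytes 24-31); placeholder address
    struct.pack_into("<Q", sqe, 24, prp1)
    # Dwords 10-11: 64-bit starting logical block address (bytes 40-47)
    struct.pack_into("<Q", sqe, 40, slba)
    # Dword 12, bits 15:0: number of logical blocks, stored zero-based
    struct.pack_into("<I", sqe, 48, num_blocks - 1)
    return bytes(sqe)


entry = build_read_sqe(cid=7, nsid=1, slba=2048, num_blocks=8)
print(len(entry), entry[0], entry[48])  # 64 2 7
```

Because every command has the same fixed size and layout, a controller can fetch and decode entries without variable-length parsing.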

The NVMe protocol can support any form of NVM, including NAND flash-enabled SSDs. NVMe reference drivers are available for a variety of operating systems, including Windows and Linux.

An NVMe SSD typically connects to the PCIe bus through an M.2 or U.2 connector. Running the NVMe protocol over these connections enables lower latency and higher IOPS, along with reduced power use.

NVMe maps input/output (I/O) commands and responses to shared memory in the host computer over a PCIe interface. The NVMe interface supports parallel I/O across multicore processors to achieve high throughput and relieve CPU bottlenecks.
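The shared-memory model rests on paired submission and completion queues: the host places commands in a submission queue, notifies the controller by writing a doorbell register, and later collects results from the matching completion queue. Drivers commonly give each CPU core its own queue pair (the Linux driver typically does), so cores don't contend for a single lock. The sketch below is a simplified in-memory simulation under those assumptions; the class name, the core count and the "success" status are illustrative, and unlike real controllers it completes commands strictly in order.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class QueuePair:
    """Simplified model of one NVMe submission/completion queue pair.

    Real queues are fixed-size ring buffers in host memory and the doorbell is a
    controller register; here both sides are plain Python objects.
    """
    depth: int
    sq: deque = field(default_factory=deque)
    cq: deque = field(default_factory=deque)
    sq_tail_doorbell: int = 0  # value the host would write to the SQ tail doorbell

    def submit(self, cid: int, opcode: str) -> None:
        # A ring of N slots holds at most N - 1 entries so "full" differs from "empty".
        if len(self.sq) >= self.depth - 1:
            raise RuntimeError("submission queue full")
        self.sq.append((cid, opcode))
        self.sq_tail_doorbell = (self.sq_tail_doorbell + 1) % self.depth

    def controller_process(self) -> None:
        # The controller fetches each pending entry and posts a completion for it.
        while self.sq:
            cid, _opcode = self.sq.popleft()
            self.cq.append((cid, "success"))

    def reap(self) -> list:
        # The host consumes all posted completions.
        done = list(self.cq)
        self.cq.clear()
        return done


NUM_CORES = 4  # hypothetical core count
# One queue pair per core, so cores never share a submission queue.
queues = {core: QueuePair(depth=64) for core in range(NUM_CORES)}

for core, qp in queues.items():
    for i in range(3):
        qp.submit(cid=core * 100 + i, opcode="read")

for qp in queues.values():
    qp.controller_process()

print(queues[2].reap())  # [(200, 'success'), (201, 'success'), (202, 'success')]
```

Because each core owns its queues, submissions from different cores proceed independently, which is how NVMe spreads I/O across multicore processors.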

Why is NVMe important?

Because it was designed for high-performance non-volatile storage media, NVMe is well suited for demanding, compute-intensive settings. It can handle enterprise workloads with a smaller infrastructure footprint and lower power consumption than other storage types, including SAS and SATA.

There's a large performance difference between NVMe, SAS and SATA. For example, NVMe has much lower latency than the SAS and SATA protocols, so it can serve workload-intensive applications that need real-time processing without creating bottlenecks.
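Little's law, a standard queuing result, offers a quick way to see why latency matters: the maximum sustainable I/O rate equals the number of commands in flight divided by the time each command takes. This sketch assumes latency stays constant as the queue fills and holds the queue depth at SATA's 32 commands for both cases, so only latency differs. The inputs are illustrative: 100 microseconds is the top of the SATA SSD latency range cited in this article, and 10 microseconds is the NVMe latency bound cited for comparison.

```python
def max_iops(outstanding_commands: int, latency_seconds: float) -> float:
    """Little's law upper bound: throughput = concurrency / latency."""
    return outstanding_commands / latency_seconds


# Same queue depth for both, so the difference comes from latency alone.
sata = max_iops(outstanding_commands=32, latency_seconds=100e-6)
nvme = max_iops(outstanding_commands=32, latency_seconds=10e-6)
print(round(sata), round(nvme))  # 320000 3200000
```

These figures are ceilings rather than predictions; real drives usually reach controller or media limits first.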

Enterprise environments and data centers benefit from the high performance granted by NVMe-based storage.

What are NVMe's use cases?

Because of its performance and ability to handle a high number of queues and commands, NVMe is suitable for the following:

- Professional and prosumer use, handling tasks such as graphics editing.

- Applications with large queue depths for storage I/O, including databases and some web operations.

- High-performance computing, specifically in applications where low latency is critical.

- Relational databases where the performance of NVMe flash memory systems lowers the number of physical servers needed.

- Applications that need to retrieve or store data in real time, such as finance and e-commerce apps.

What are the benefits of NVMe?

Benefits of NVMe include the following:

- NVMe drives can issue commands about twice as fast as SATA drives that use the Advanced Host Controller Interface (AHCI).

- A PCIe interface can transfer much more data than a SATA interface.

- NVMe SSDs have a latency of only a few microseconds, while SATA SSDs have a latency between 30 and 100 microseconds.

- NVMe offers efficient storage, management and data access.

- It has a much higher bandwidth compared with SATA and SAS.

- NVMe supports multiple form factors, including M.2 and U.2 connectors.

- It uses a streamlined command set, which lets devices parse commands efficiently.

- NVMe supports tunneling protocols for privacy.

- It is power-efficient: NVMe-enabled SSDs in standby mode consume about 0.0032 watts, compared with about 0.08 watts for an active NVMe SSD.

- NVMe works with all major operating systems, and in the M.2 form factor, it's compatible with mobile devices such as laptops.

- NVMe SSDs provide faster game booting, loading times and installation times for gaming consoles.

What are the drawbacks of NVMe?

Potential disadvantages of NVMe include the following:

- A lack of support for legacy systems.

- Not cost-effective for storing large volumes of data. NVMe storage costs more per unit of capacity than well-established spinning hard drives.

- Typically used with the M.2 format, which can further limit drive selection.

What are the differences between SATA, NVMe and SAS?

SATA. SATA is a communication protocol developed for computers to interact with hard disk drive storage systems. Introduced in 2000, SATA superseded parallel ATA and quickly became the ubiquitous storage protocol for computers. Successive revisions of the spec raised its line rate to 6 gigabits per second (Gbps), for an effective throughput of up to 600 megabytes per second.
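The gap between SATA's 6 Gbps line rate and its 600 MBps effective throughput comes from line encoding: SATA uses 8b/10b encoding, so every data byte occupies 10 bits on the wire. The sketch below works through that arithmetic and, for comparison, applies it to a PCIe 3.0 x4 link (8 GT/s per lane with 128b/130b encoding), a common link width for NVMe SSDs. It accounts only for line encoding and ignores other protocol overhead; the function name is illustrative.

```python
def effective_mb_per_s(line_rate_gbps: float, lanes: int, payload_bits: int, total_bits: int) -> float:
    """Usable bandwidth after line-encoding overhead, in megabytes per second.

    For PCIe, the per-lane transfer rate in GT/s equals its raw rate in Gbps.
    """
    usable_bits_per_s = line_rate_gbps * 1e9 * lanes * payload_bits / total_bits
    return usable_bits_per_s / 8 / 1e6  # bits -> bytes -> megabytes


# SATA 3.x: one 6 Gbps link, 8b/10b encoding
print(effective_mb_per_s(6, 1, 8, 10))  # 600.0

# PCIe 3.0 x4: four 8 GT/s lanes, 128b/130b encoding
print(round(effective_mb_per_s(8, 4, 128, 130)))  # 3938
```

The newer encoding wastes about 1.5% of the raw rate instead of 20%, and multiple lanes multiply the result, which is part of why PCIe-attached NVMe drives outrun SATA.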

Although SATA was developed for hard disk technology with mechanical spinning platters and actuator-controlled read/write heads, early SSDs were marketed with SATA interfaces to take advantage of the existing SATA ecosystem. It was a convenient design and helped accelerate SSD adoption, but it wasn't the ideal interface for NAND flash memory and increasingly became a system bottleneck.

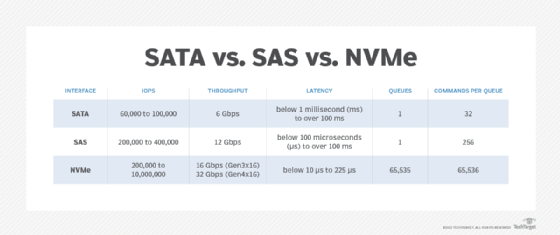

SATA supports up to 100,000 IOPS, 6 Gbps of throughput, less than 1 millisecond of latency, one queue and 32 commands per queue. NVMe supports up to 10 million IOPS, 16 Gbps of throughput, less than 10 microseconds of latency, 65,535 queues and 65,536 commands per queue.

NVMe. Designed for flash, NVMe's speed and low latency leave SATA in the dust and enable much higher storage capacities in smaller form factors such as M.2. Generally, NVMe performance parameters exceed those of SATA by a factor of five or more.

SATA is more established, with a long history and lower implementation costs than NVMe, but it is a hard disk interface that has been retrofitted for more modern storage media.

SAS. NVMe supports up to 65,536 (64K) commands in a single queue and a maximum of 65,535 I/O queues. By contrast, a SAS device's queue typically supports up to 256 commands, and a SATA drive supports up to 32 commands in one queue.
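Multiplying the number of queues by the commands each queue holds gives a theoretical ceiling on commands in flight for each interface. This sketch uses the limits cited in this article and, as a simplifying assumption, treats SAS as a single 256-command queue.

```python
# Limits cited in this article: (number of queues, commands per queue).
# Treating SAS as a single 256-command queue is a simplifying assumption.
limits = {
    "SATA": (1, 32),
    "SAS": (1, 256),
    "NVMe": (65_535, 65_536),
}

# Theoretical ceiling on commands in flight = queues x commands per queue
totals = {name: num_queues * depth for name, (num_queues, depth) in limits.items()}

for name, total in totals.items():
    print(f"{name}: {total:,} commands in flight")
# SATA: 32 commands in flight
# SAS: 256 commands in flight
# NVMe: 4,294,901,760 commands in flight
```

In practice, controllers support and drivers create far fewer queues than the maximum, so the NVMe figure shows headroom in the protocol rather than a typical workload.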

Also, high-end enterprise NVMe SSDs can consume more power than SAS or SATA SSDs. The SCSI Trade Association claims that the more mature SAS SSDs offer additional advantages over NVMe PCIe SSDs, such as greater scalability, hot pluggability and time-tested failover capabilities. NVMe PCIe SSDs can also provide a level of performance that many applications don't require.

History and evolution of NVM Express

The Non-Volatile Memory Host Controller Interface Work Group began developing the NVMe specification in 2009 and published the 1.0 version on March 1, 2011. This specification included the queuing interface, NVM command set, administration command set and security features. Other noteworthy dates include the following:

- Nov. 8, 2012. The NVM Express Work Group released NVMe 1.1, which added support for SSDs with multiple PCIe ports to enable multipath I/O and namespace sharing. Other new capabilities included autonomous power state transitions during idle time to reduce energy needs, as well as reservations, which let two or more hosts coordinate access to a shared namespace to improve fault tolerance.

- May 2013. The NVM Express Work Group held its first Plugfest to enable companies to test their products' compliance with the NVMe specification and to check interoperability with other NVMe products.

- March 2014. The NVM Express Work Group was incorporated under the NVM Express organization name. The group later became known simply as NVM Express Inc. The nonprofit organization has more than 100 technology member companies.

- Nov. 3, 2014. The NVMe 1.2 specification emerged, with enhancements such as support for live firmware updates, improved power management and the option for end-to-end data protection.

- Nov. 17, 2015. The NVM Express organization ratified the 1.0 version of the NVM Express Management Interface (NVMe-MI) to provide an architecture and command set to manage a non-volatile memory subsystem out of band. NVMe-MI enables a management controller to perform tasks such as SSD device and capability discovery, health and temperature monitoring, and nondisruptive firmware updates. Without NVMe-MI, IT managers generally relied on proprietary, vendor-specific management interfaces to enable the administration of PCIe SSDs.

- June 2017. NVM Express released NVMe 1.3. Highlights centered on sanitize operations, virtualization and a new framework known as Directives.

- July 2019. NVMe 1.4 was introduced along with enhancements and new features, including a rebuild assist, persistent event log, asymmetric namespace access, host memory buffer and persistent memory region.

- 2020. The NVMe Zoned Namespaces (ZNS) Command Set specification was ratified. This specification enables NVMe to isolate and evolve command sets for emerging technologies, including ZNS, key value and computational storage.

- June 3, 2021. NVMe 2.0 was released. The specification was restructured to enable faster and easier development of NVMe and to support a more diverse NVMe environment.

- 2022. The NVMe 2.0 specifications were revised twice: NVMe 2.0b was released in January 2022 and 2.0c in October 2022. These updates were made to enable faster and easier development of NVMe technology and added features such as ZNS and endurance group management.

NVMe form factors and standards

The need for a storage interface and protocol to better exploit NAND flash's performance potential in enterprise environments was the driving force behind the development of NVMe. But reimagining the connection standard opened the door to several different types of interface implementations that could stay within the bounds of the new spec while offering a variety of implementation options.

In short order, the following flash form factors conforming to NVMe specifications emerged:

- AIC. The Add-In Card (AIC) form factor lets manufacturers create their own cards that fit into the PCIe bus without worrying about storage bay designs or similar limitations. The cards are often designed for special use cases and can include additional processors and other chips to enhance the performance of solid-state storage.

- M.2. The M.2 form factor was developed to take advantage of NAND flash's compact size and low heat output. As such, M.2 NVMe devices aren't intended to fit into traditional drive bay compartments, but rather to be deployed in much smaller spaces. Often described as about the size of a stick of gum, M.2 SSDs typically measure 22 millimeters wide and about 80 mm long, although some products might be longer or shorter.

- U.2. Unlike the M.2 form factor, U.2 SSDs were designed to fit into existing storage bays originally intended for standard SATA or SAS devices. U.2 SSDs look similar to older media, as they typically use the 2.5-inch or 3.5-inch enclosures that are familiar housings for hard disk drives. The idea was to make NVMe technology easy to implement with as little reengineering as possible.

- EDSFF. Another less widely deployed NVMe form factor is the Enterprise and Data Center Standard Form Factor (EDSFF). The goal of EDSFF is to bring higher performance and capacities to enterprise-class storage systems. Perhaps the best-known examples of EDSFF flash are Intel's E1.L long and E1.S short flash devices, which are provided in what was originally referred to as the ruler form factor.

- U.3. This form factor can accommodate NVMe, SAS and SATA drives in the same server slot. U.3 was built using the U.2 specification and works using a tri-mode controller, SFF-8639 connectors and a universal backplane management framework. With U.3, organizations don't need separate NVMe, SAS or SATA adapters.

NVMe over fabrics

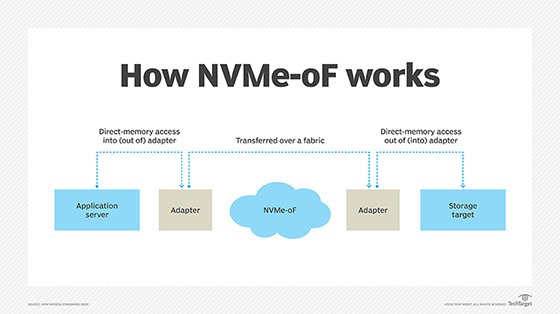

NVM Express Inc. published version 1.0 of the NVMe over fabrics (NVMe-oF) specification on June 5, 2016. NVMe-oF is designed to extend the high-performance and low-latency benefits of NVMe across network fabrics that connect servers and storage systems. The NVMe-oF 1.1 specification, released in 2019, offers improved fabric communication, finer-grain I/O resource management, end-to-end flow control and support for NVMe/TCP. Both specifications also offer lower latency, improved management and provisioning of flash, and remote storage access.

Fabric transports include NVMe-oF using remote direct memory access (RDMA) and NVMe over Fibre Channel (FC-NVMe). A technical subgroup of NVM Express Inc. worked on NVMe-oF with RDMA, while the T11 committee of the International Committee for Information Technology Standards developed NVMe over Fibre Channel.

The NVMe-oF specification is largely the same as the NVMe specification. However, one of the main differences between them is the methodology for transmitting and receiving commands and responses. Designed for local use, NVMe maps commands and responses to a computer's shared memory via PCIe. By contrast, NVMe-oF employs a message-based system to communicate between the host computer and target storage device.
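To illustrate the difference between memory-mapped and message-based delivery, the sketch below wraps a 64-byte command, plus optional data, into one self-describing message, the kind of unit NVMe-oF calls a capsule. The 4-byte length-prefix framing and the function names are hypothetical and chosen only for clarity; RDMA, Fibre Channel and TCP transports each define their own message formats.

```python
import struct

SQE_SIZE = 64  # an NVMe submission queue entry is 64 bytes


def encode_capsule(sqe: bytes, data: bytes = b"") -> bytes:
    """Wrap a command and optional in-capsule data in one message (hypothetical framing)."""
    if len(sqe) != SQE_SIZE:
        raise ValueError("command must be exactly 64 bytes")
    body = sqe + data
    # Network byte order length prefix so the receiver knows where the message ends
    return struct.pack("!I", len(body)) + body


def decode_capsule(message: bytes) -> tuple:
    """Split a message back into the 64-byte command and any trailing data."""
    (length,) = struct.unpack_from("!I", message, 0)
    body = message[4:4 + length]
    return body[:SQE_SIZE], body[SQE_SIZE:]


command = bytes([0x01]) + bytes(63)  # placeholder Write command: opcode 0x01, rest zero
msg = encode_capsule(command, data=b"hello")
sqe, payload = decode_capsule(msg)
print(len(msg), sqe[0], payload)  # 73 1 b'hello'
```

Locally, the controller would read the same 64 bytes directly from host memory; over a fabric, the command travels inside a message, which is why the transport must frame it.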

The design goal for NVMe-oF was to add no more than 10 microseconds of latency for communication between an NVMe host computer and a network-connected NVMe storage device.
