Data storage management: What is it and why is it important?

Effective data storage management is more important than ever, as security and regulatory compliance have become even more challenging and complex over time.

Enterprise data volumes continue to grow exponentially. So how can organizations effectively store it all? That's where data storage management comes in.

Sound management is key to ensuring that organizations use storage resources efficiently and store data securely, in compliance with company policies and government regulations. To develop their own strategy, IT administrators and managers must understand the procedures and tools that data storage management encompasses.

Organizations must keep in mind how storage management has changed in recent years. The COVID-19 pandemic increased remote work, the use of cloud services and cybersecurity concerns such as ransomware. Even before the pandemic, all those elements saw major surges -- and after the pandemic, these elements will still be prominent.

With this guide, explore what data storage management is, who needs it, advantages and challenges, key storage management software features, security and compliance concerns, implementation tips, and vendors and products.

What data storage management is, who needs it and how to implement it

Storage management ensures data is available to users when they need it.

Data storage management is typically part of the storage administrator's job. Organizations without a dedicated storage administrator might use an IT generalist for storage management.

The data retention policy is a key element of storage management and a good starting point for implementation. This policy defines the data an organization retains for operational or compliance needs. It describes why the organization must keep the data, the retention period and the process of disposal. It helps an organization determine how it can search and access data. The retention policy is especially important now as data volumes continually increase, and it can help cut storage space and costs.
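As a rough illustration, the elements of a retention policy described above (what is kept, why, for how long and how it is disposed of) can be captured as data and checked automatically. The sketch below is only one possible shape. The categories, retention periods, disposal actions and the `disposal_due` helper are all hypothetical, and real values would come from legal and business requirements.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass(frozen=True)
class RetentionRule:
    category: str        # kind of data the rule covers
    reason: str          # why the organization must keep it
    retention_days: int  # how long to keep it
    disposal: str        # what to do once the period ends


# Hypothetical rules for illustration only.
POLICY = {
    "invoice": RetentionRule("invoice", "tax records", 7 * 365, "secure-delete"),
    "web-log": RetentionRule("web-log", "troubleshooting", 90, "delete"),
}


def disposal_due(category: str, created: date, today: date) -> Optional[str]:
    """Return the disposal action if the retention period has ended, else None."""
    rule = POLICY[category]
    expires = created + timedelta(days=rule.retention_days)
    return rule.disposal if today >= expires else None


if __name__ == "__main__":
    print(disposal_due("web-log", date(2024, 1, 1), date(2024, 6, 1)))
    print(disposal_due("invoice", date(2024, 1, 1), date(2024, 6, 1)))
```

Keeping the reason next to each rule makes it easier to review whether a retention period is still justified when regulations or business needs change.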

The task of data storage management also includes provisioning and configuring resources, handling both unstructured and structured data, and evaluating how needs might change over time.

To help with implementation, a management tool that meets organizational needs can ease the administrative burden that comes with large amounts of data. Features to look for in a management tool include storage capacity planning, performance monitoring, compression and deduplication.
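To make the capacity planning feature concrete, here is a minimal sketch of the kind of estimate such a tool might produce. It assumes usage is sampled once per day and grows roughly linearly. The function name and the sample figures are hypothetical, and commercial tools typically use more sophisticated forecasting.

```python
from typing import List, Optional


def days_until_full(capacity_gb: float, used_gb_by_day: List[float]) -> Optional[float]:
    """Estimate days until capacity is reached, from average daily growth.

    used_gb_by_day holds one usage sample per day, oldest first.
    Returns None when usage is flat or shrinking.
    """
    if len(used_gb_by_day) < 2:
        raise ValueError("need at least two daily samples")
    days_observed = len(used_gb_by_day) - 1
    growth_per_day = (used_gb_by_day[-1] - used_gb_by_day[0]) / days_observed
    if growth_per_day <= 0:
        return None
    return (capacity_gb - used_gb_by_day[-1]) / growth_per_day


if __name__ == "__main__":
    # 1,000 GB array that grew from 600 GB to 640 GB over four days.
    print(days_until_full(1000, [600, 610, 620, 630, 640]))
```

In this example the array grows by 10 GB per day with 360 GB free, so the estimate is 36 days. A linear model is the simplest choice and will mislead when growth is seasonal or bursty.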

Advantages and challenges of data storage management

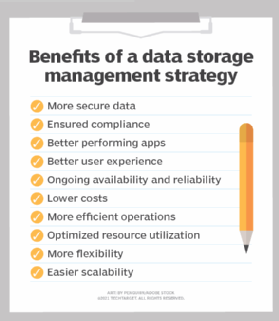

Data storage management has both advantages and challenges. On the plus side, it improves performance and protects against data loss. With effective management, storage systems perform well across geographic areas, time and users. It also ensures that data is safe from outside threats, human error and system failures. Proper backup and disaster recovery are pieces of this data protection strategy.

An effective management strategy provides users with the right amount of storage capacity. Organizations can scale storage space up and down as needed. The storage strategy accommodates constantly changing needs and applications.

Storage management also eases the load on admins by centralizing administration, so they can oversee a variety of storage systems. These benefits reduce costs as well, because admins can make better use of storage resources.

Challenges of data storage management include persistent cyberthreats, data management regulations and a distributed workforce. These challenges illustrate why it's so important to implement a comprehensive plan. A storage management strategy should protect the organization's data against data breaches, ransomware and other malware attacks. It should support compliance, because a lack of compliance could lead to hefty fines. And it should give remote workers access to files and applications just as they would have in a traditional office environment.

Distributed and complex systems present a hurdle for data storage management. Not only are workers spread out, but systems run both on premises and in the cloud. An on-premises storage environment could include HDDs, SSDs and tapes. Organizations often use multiple clouds. New technologies, such as AI, can benefit organizations but also increase complexity.

Unstructured data -- which includes documents, emails, photos, videos and metadata -- has surged, and this also complicates storage management. Challenges with unstructured data include its volume, new data types and how to gain value from it. Although some organizations might not want to spend the time to manage unstructured data, doing so ultimately saves money and storage space. Vendors such as Aparavi, Dell EMC, Pure Storage and Spectra Logic offer tools for this type of management.

Object storage can provide high performance but also has challenges, including the infrastructure's scale-out nature and potentially high latency. Organizations must address issues with metadata performance and cluster management.

Data storage management strategies

Storage management processes and practices vary, depending on the technology, platform and type.

Here are some general methods and services for data storage management:

- storage resource management software

- consolidation of systems

- multiprotocol storage arrays

- storage tiers

- strategic SSD deployment

- hybrid cloud

- scale-out systems

- archive storage of infrequently accessed data

- elimination of inactive virtual machines

- deduplication

- disaster recovery as a service

- object storage
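Of the methods listed above, deduplication is one of the easiest to illustrate in a few lines. The sketch below is a toy, in-memory example that assumes fixed-size chunks identified by their SHA-256 hash. The `DedupStore` class is hypothetical and does not reflect how any particular product implements deduplication.

```python
import hashlib


class DedupStore:
    """Toy content-addressed store: identical chunks are kept only once."""

    def __init__(self, chunk_size: int = 4096):
        self.chunk_size = chunk_size
        self.chunks = {}  # SHA-256 hex digest -> chunk bytes
        self.files = {}   # file name -> ordered list of chunk digests

    def put(self, name: str, data: bytes) -> None:
        digests = []
        for start in range(0, len(data), self.chunk_size):
            chunk = data[start:start + self.chunk_size]
            digest = hashlib.sha256(chunk).hexdigest()
            self.chunks.setdefault(digest, chunk)  # store only unseen chunks
            digests.append(digest)
        self.files[name] = digests

    def get(self, name: str) -> bytes:
        """Reassemble a file from its chunk digests."""
        return b"".join(self.chunks[d] for d in self.files[name])

    def stored_bytes(self) -> int:
        """Bytes physically held after deduplication."""
        return sum(len(c) for c in self.chunks.values())


if __name__ == "__main__":
    store = DedupStore(chunk_size=4)
    store.put("a.bin", b"AAAABBBB")
    store.put("b.bin", b"AAAACCCC")  # shares its first chunk with a.bin
    print(store.stored_bytes())      # 16 bytes written, fewer stored
```

Here two 8-byte files share one 4-byte chunk, so the store holds 12 bytes instead of 16. Fixed-size chunking is the simplest approach and misses duplicates that are shifted by even one byte.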

Organizations may consider incorporating standards-based storage management interfaces as part of their management strategy. The Storage Management Initiative Specification and the Intelligent Platform Management Interface are two veteran models, while Redfish and Swordfish have emerged as newer options. Interfaces offer management, monitoring and simplification.

As for media type, it's tempting to go all-flash because of its performance. However, to save money, consider a hybrid drive option that incorporates high-capacity HDD and high-speed SSD technology.
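One way to picture mixing media types is a placement rule that keeps recently used data on fast media and moves the rest to cheaper tiers. The following is a minimal sketch under that assumption. The tier names, the 7-day and 90-day thresholds and the `choose_tier` function are hypothetical, and real tiering engines usually weigh access frequency, size and cost as well.

```python
from datetime import datetime, timedelta

# Hypothetical thresholds; tune them to the workload and budget.
HOT_WINDOW = timedelta(days=7)
WARM_WINDOW = timedelta(days=90)


def choose_tier(last_access: datetime, now: datetime) -> str:
    """Pick a storage tier from how recently the data was accessed."""
    age = now - last_access
    if age <= HOT_WINDOW:
        return "ssd"      # frequently used data stays on fast media
    if age <= WARM_WINDOW:
        return "hdd"      # occasionally used data moves to cheaper capacity
    return "archive"      # infrequently accessed data goes to archive storage


if __name__ == "__main__":
    now = datetime(2024, 6, 1)
    for accessed in (datetime(2024, 5, 30), datetime(2024, 4, 1), datetime(2023, 1, 1)):
        print(accessed.date(), choose_tier(accessed, now))
```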

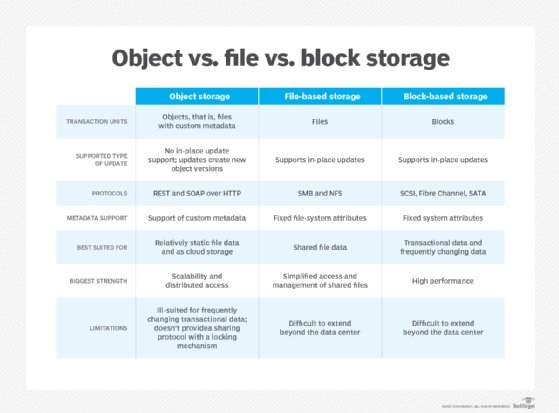

Organizations also must choose among object, block and file storage. Block storage is the default type for HDDs and SSDs, and it provides strong performance. File storage places files in folders and offers simplicity. Object storage efficiently organizes unstructured data at a comparatively low cost. NAS is another worthwhile option for storing unstructured data because of its organizational capabilities and speed.

Storage security

With threats both internal and external, storage security is as important as ever to a management strategy. Storage security provides both protection and availability: It keeps data accessible to authorized users while guarding against unauthorized access.

A storage security strategy should have tiers. Security risks are so varied, from ransomware to insider threats, that organizations must protect their data storage in a number of ways. Proper permissions, monitoring and encryption are key to cyberthreat defense.

Offline storage -- for example, in tape backup -- that isn't connected to a network is a strong way to keep data safe. If attackers can't reach the data, they can't harm it. While it's not feasible to keep all data offline, this type of storage is an important aspect of a strong storage security strategy.

Another aspect is off-site storage, one form of which is cloud storage. Organizations shouldn't assume that this keeps their data entirely safe. Users are responsible for their data, and cloud storage is still online and thus open to some risk.

The surge in remote workers produced a new level of storage security complications, including the following risks:

- less secure home office environments;

- use of personal devices for work;

- misuse of services and applications;

- less formal work habits;

- adjustments to working from home; and

- more opportunities for malicious insiders.

Endpoint security, encryption, access controls and user training help protect against these new storage security issues.

Data storage compliance

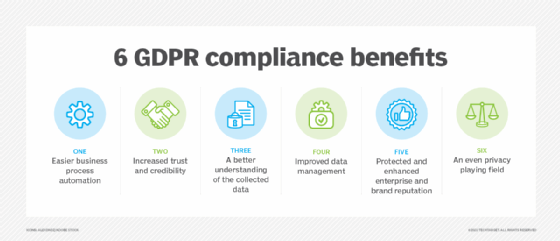

Compliance with regulations has always been important, but the need has increased in the last few years with laws such as the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act. These laws specifically address data and storage, so it's incumbent on organizations to comprehend them and ensure compliance.

Data storage management helps organizations understand where they have data, which is a major piece of compliance. Compliance best practices include documentation, automation, anonymization and use of governance tools.

Immutable data storage also helps achieve compliance. Immutability ensures retained data -- for example, legal holds -- doesn't change. Vendors such as AWS, Dell EMC and Wasabi provide immutable storage. However, organizations should still retain more than one copy of this data, as immutability doesn't protect against physical threats, such as natural disasters.
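The behavior described above, where retained data can't be changed until its hold ends, can be sketched as a write-once store. This is a toy, in-memory illustration only. The `ImmutableStore` class and its methods are hypothetical and are not the API of AWS, Dell EMC, Wasabi or any other vendor.

```python
from datetime import date


class ImmutableStore:
    """Toy write-once store: no overwrites, no deletes before the retain-until date."""

    def __init__(self):
        self._objects = {}  # key -> (data, retain_until)

    def put(self, key: str, data: bytes, retain_until: date) -> None:
        if key in self._objects:
            raise PermissionError(f"{key!r} already exists and cannot be overwritten")
        self._objects[key] = (data, retain_until)

    def get(self, key: str) -> bytes:
        return self._objects[key][0]

    def delete(self, key: str, today: date) -> None:
        _, retain_until = self._objects[key]
        if today < retain_until:
            raise PermissionError(f"{key!r} is retained until {retain_until}")
        del self._objects[key]


if __name__ == "__main__":
    store = ImmutableStore()
    store.put("legal-hold/case-1.pdf", b"evidence", retain_until=date(2030, 1, 1))
    try:
        store.delete("legal-hold/case-1.pdf", today=date(2025, 1, 1))
    except PermissionError as err:
        print("blocked:", err)
```

As the text notes, a lock like this guards against modification, not against losing the only copy, so a second copy in another location is still needed.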

Data storage technology, vendors and products

Key features to look for from data storage management providers include resource provisioning, process automation, load balancing, capacity planning and management, predictive analytics, performance monitoring, replication, compression, deduplication, snapshotting and cloning.

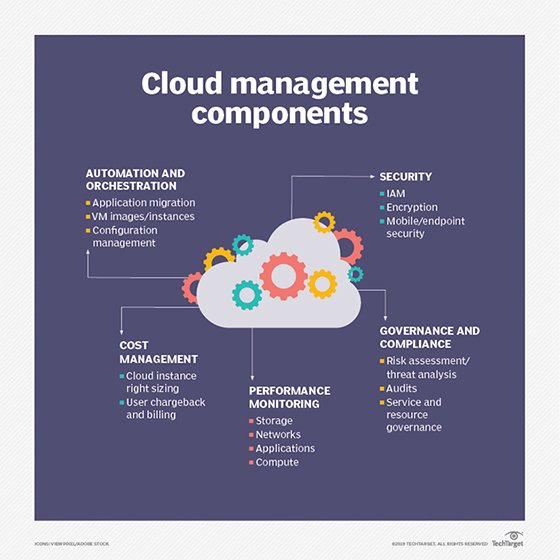

Recent trends among vendors include services for cloud storage and the container management platform Kubernetes. Top storage providers can support a range of different platforms. And though Kubernetes is more specialized, it has gained traction: Vendors such as Diamanti, NetApp and Pure Storage provide Kubernetes services.

Some form of cloud management is essentially table stakes for storage vendors. A few vendors, including Cohesity and Rubrik, have made cloud data management a hallmark of their platforms. Many organizations use more than one cloud, so multi-cloud data management is crucial. Managing data storage across multiple clouds is complex, but vendors such as Ctera, Dell EMC, NetApp and Nutanix can help.

The future of data storage management

Data storage administrators must be ready for a constantly evolving field. Cloud storage was trending up before the pandemic and has skyrocketed since -- and once organizations go to the cloud, they typically stay there. As a result, admins must understand the various forms of cloud storage management, including multi-cloud, hybrid cloud, cloud-native data and cloud data protection.

Hyper-convergence, composable infrastructure and computational storage are also popular frameworks.

In addition, admins must be aware of other new and emerging technologies that help storage management, from automation to machine learning.