hybrid cloud storage

What is hybrid cloud storage?

Hybrid cloud storage is an approach to managing storage that combines local and off-site resources. A hybrid cloud storage infrastructure often supplements internal data storage with public cloud storage. Policy engines keep frequently used data on site and transparently move inactive data to the cloud.
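To make the policy engine idea concrete, the following is a minimal sketch of an age-based tiering rule. It is an illustration under stated assumptions, not any vendor's implementation: the `StoredObject` type, the `plan_tiering` function and the 90-day inactivity threshold are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class StoredObject:
    # Hypothetical record describing one stored item.
    name: str
    last_accessed: datetime


def plan_tiering(objects, now, inactive_after=timedelta(days=90)):
    """Split object names into those kept on site and those moved to the cloud.

    The 90-day default is an assumed threshold chosen for illustration.
    """
    keep_on_site, move_to_cloud = [], []
    for obj in objects:
        if now - obj.last_accessed >= inactive_after:
            move_to_cloud.append(obj.name)   # inactive data goes to the cloud
        else:
            keep_on_site.append(obj.name)    # frequently used data stays local
    return keep_on_site, move_to_cloud


now = datetime(2024, 6, 1)
objects = [
    StoredObject("q2-forecast.xlsx", datetime(2024, 5, 28)),
    StoredObject("2019-audit.pdf", datetime(2020, 1, 15)),
]
on_site, cloud = plan_tiering(objects, now)
print(on_site)  # ['q2-forecast.xlsx']
print(cloud)    # ['2019-audit.pdf']
```

A real policy engine would likely weigh more than last-access time, such as object size, retention rules or compliance tags, but the decision structure is similar.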

Ideally, a hybrid cloud implementation behaves as if it were a single, homogeneous storage system. Hybrid cloud storage is most often implemented in one of three ways: with proprietary commercial storage software, with a cloud storage appliance that serves as a gateway between on-premises and public cloud storage, or with an API that accesses the cloud storage.
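The gateway idea can be sketched as a thin layer that hides which tier holds an object. This is a simplified illustration, not a real appliance or API: the `HybridGateway` class and its method names are hypothetical, and plain dictionaries stand in for the on-premises and public cloud back ends.

```python
class HybridGateway:
    """Presents a local tier and a cloud tier as one key-value store."""

    def __init__(self):
        # Plain dicts stand in for real storage back ends (an assumption).
        self.local = {}
        self.cloud = {}

    def put(self, key, data):
        """Write new data to the local tier and drop any stale cloud copy."""
        self.local[key] = data
        self.cloud.pop(key, None)

    def demote(self, key):
        """Move an object from the local tier to the cloud tier."""
        self.cloud[key] = self.local.pop(key)

    def get(self, key):
        """Return the object from whichever tier holds it.

        Raises KeyError if neither tier has the key.
        """
        if key in self.local:
            return self.local[key]
        return self.cloud[key]


gw = HybridGateway()
gw.put("report.docx", b"draft 1")
gw.demote("report.docx")                 # object now lives in the cloud tier
print(gw.get("report.docx"))             # b'draft 1'
```

The caller uses the same `get` call regardless of where the data sits, which is the sense in which the storage appears homogeneous.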

Hybrid cloud storage is also a common way for organizations to support data backup processes and disaster recovery (DR) planning. Because replicating data from a conventional on-premises data center to a secondary data center can be tedious and expensive, sending backup data to the cloud can be a more logical approach. Additionally, cloud backup services can provide organizations with higher levels of reliability, quicker recovery times and lower costs.

Another popular use for hybrid cloud storage is to separate archival or infrequently accessed data from regularly accessed data. Keeping dormant data in primary data storage can slow down data retrieval processes, complicate data backup practices and reduce the available on-site storage capacity.

A hybrid cloud storage approach can combine multiple storage components, each suited to different needs, and automatically place data in the most appropriate location based on the organization's own data storage and retention requirements.

Benefits and drawbacks of hybrid cloud storage

Before an organization adopts hybrid cloud storage, it should consider both the benefits and the drawbacks associated with doing so. The following are some of the primary benefits commonly associated with hybrid cloud storage:

- Businesses can move workloads between on-premises infrastructure or private clouds and the public cloud, using the public cloud to host only the data and applications that they are comfortable placing there.

- Hybrid clouds are an ideal option for companies that make extensive use of containers. Containerized workloads are portable, which means that an organization can run such a workload on premises or in the cloud and then move the workload on an as-needed basis. Moving to the cloud or migrating a containerized workload to a different cloud tends to be a relatively easy process, and cloud management platforms help administrators streamline cloud storage management behind a single pane of glass.

- Some organizations use hybrid cloud storage as a way of reducing costs. This is particularly true if a company already has the infrastructure for private cloud storage but needs more capacity. Bursting to the public cloud can help that business keep its existing private cloud relevant.

- Hybrid cloud storage offers more control, particularly for organizations that have regulatory compliance issues to contend with.

Besides these advantages, there are some potential disadvantages to consider:

- Hybrid cloud storage comes at a price. Companies must buy the hardware and software used to run the on-premises portion.

- An organization's IT staff will require a certain level of expertise to keep the private cloud portion functioning properly. Hybrid clouds also tend to be far more complex than a native cloud service because of the integration components needed to make on-premises storage services mesh with various public cloud computing platforms.

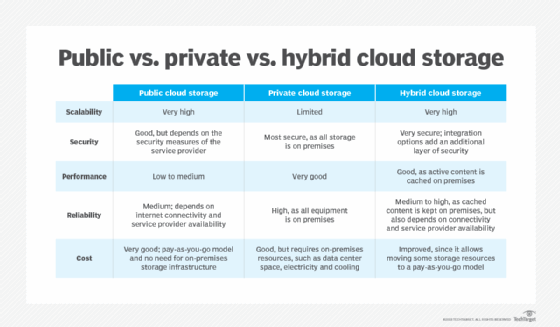

Hybrid vs. public and private cloud storage

The decision to use a public, private or hybrid cloud storage option depends on what an organization needs from its cloud. Businesses with a great deal of mission-critical, proprietary data might want to store that information on premises or in a private cloud to keep it out of the hands of competitors. That isn't to say that those particular businesses can't also use a public cloud. If a private cloud can't meet their needs, a hybrid approach might work well.

Organizations that don't need to keep their data tightly secured or regulated can use the public cloud, which might be their most cost-effective option.

Hybrid cloud storage examples

Some of the most popular hybrid cloud storage providers are AWS, Cisco, Dell, HPE, IBM, Microsoft, Rackspace and VMware. Here are a few examples of hybrid cloud storage products:

- Dell Apex. This product is designed to provide a more consistent experience across public clouds and the private cloud. It also brings consumption-based pricing to the data center.

- AWS Storage Gateway. This product connects on-premises applications and systems to cloud storage to provide centralized data management and seamless integration.

- IBM Spectrum. The entire IBM Spectrum line of products is designed for managing complex storage architectures while also keeping data secure. The following products are included in IBM's Spectrum line:

  - IBM Spectrum Storage Suite

  - IBM Spectrum Control

  - IBM Spectrum Storage Insights

  - IBM Spectrum Copy Data Management

  - IBM Spectrum Protect

  - IBM Spectrum Protect Plus

  - IBM Spectrum Archive

  - IBM Spectrum Sentinel

  - IBM Spectrum Virtualize for Public Cloud

  - IBM Cloud Object Storage

  - IBM Spectrum Fusion

  - IBM Storage Suite for IBM Cloud Paks

  - IBM Spectrum Discover

  - IBM Spectrum Scale

  - IBM Spectrum Conductor

  - IBM Spectrum Symphony

  - IBM Spectrum LSF Suites

  - IBM Spectrum MPI

Top use cases of hybrid cloud storage

There are numerous potential use cases for hybrid cloud storage, including backing up data to the cloud and disaster recovery or workload failover to the public cloud. The following are some other common use cases:

- Data archiving. Organizations will often move cold, aging or archival data to a cloud-based storage tier where the data can be better protected or stored at a lower cost.

- Increasing capacity/burst capacity. Public cloud storage can be used to supplement the storage that is available on premises, resulting in greater overall capacity.

- Data versioning. Cloud storage can be used for immutable data storage, which helps protect against ransomware, or to retain multiple versions of files.

- Workload migration. Workloads, particularly those associated with containers, can be migrated to the optimal location based on cost and performance requirements.

- Data migration. Hybrid clouds give organizations the flexibility to place data in close proximity to the workloads that will be using the data.
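As an illustration of the burst capacity use case in the list above, the following sketch assigns each incoming write to on-premises storage while it fits and overflows the rest to the public cloud. The `place_writes` function, its parameters and the sizes used are hypothetical and chosen only for the example.

```python
def place_writes(sizes_gb, local_capacity_gb, local_used_gb=0):
    """Assign each write to 'local' while it fits, otherwise to 'cloud'.

    sizes_gb is a list of write sizes in GB; capacity figures are assumed
    values for illustration, not recommendations.
    """
    placements = []
    used = local_used_gb
    for size in sizes_gb:
        if used + size <= local_capacity_gb:
            used += size
            placements.append("local")
        else:
            # On-premises storage is full for this write: burst to the cloud.
            placements.append("cloud")
    return placements


print(place_writes([40, 40, 40, 10], local_capacity_gb=100))
# ['local', 'local', 'cloud', 'local']
```

The third write would push local usage past 100 GB, so it goes to the cloud, while the smaller fourth write still fits on premises.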