Why is a data archive plan important?

A data archiving plan might be more than important for your organization. Given legal and compliance requirements, it could be necessary. Make sure you're ahead of the game.

Just as a data backup plan is an essential part of any IT organization, so, too, is a data archive plan, especially if you have a broad assortment of electronic and non-electronic items to protect.

A data protection and management plan addresses the protection, security and access of data. When coupled with an archiving plan, it also covers storage that you can rely on over extended time frames.

Reasons for archiving data include the following:

- corporate policy;

- regulatory requirements;

- legal requirements, such as e-discovery;

- future audit requirements;

- preservation of company business data; and

- protection of different data types.

A data archive plan provides specific rules, standards and guidelines for the following:

- overall data archiving strategy;

- types of data to be archived;

- data retention periods;

- archiving technologies to be used, such as deduplication;

- search and access methodologies;

- access permissions;

- security requirements;

- protection of data integrity;

- storage resources;

- data and document management techniques;

- specifications for archival software; and

- responding to e-discovery and audit requests.

When preparing a data archive plan, follow a four-step process. First, use your data backup and data management plans as starting points. Second, analyze the types of data and information that you must place into longer-term storage. Third, establish metrics for retention, access and other activities. Finally, consult with subject matter experts and management in your organization to make sure you have identified the necessary data and met archiving requirements.
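Retention metrics are easier to apply consistently when they are written down as explicit rules. The Python sketch below is a minimal illustration under stated assumptions: `RETENTION_YEARS` is a hypothetical schedule mapping data types to retention periods (the categories and values are placeholders, not legal or regulatory guidance), and `legal_hold` is a hypothetical flag for items subject to an e-discovery request. It computes when an item's retention period ends and whether the item may be disposed of.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical retention schedule (data type -> years to retain).
# Placeholder values only; real periods come from your policy and counsel.
RETENTION_YEARS = {
    "financial_records": 7,
    "email": 3,
    "contracts": 10,
}


@dataclass
class ArchivedItem:
    item_id: str
    data_type: str            # must be a key in RETENTION_YEARS
    archived_on: date
    legal_hold: bool = False  # hypothetical flag, e.g. set during e-discovery


def add_years(d: date, years: int) -> date:
    """Same month and day `years` later; Feb 29 falls back to Feb 28."""
    try:
        return d.replace(year=d.year + years)
    except ValueError:
        return d.replace(year=d.year + years, day=28)


def retention_expires(item: ArchivedItem) -> date:
    """Date on which the item's retention period ends."""
    return add_years(item.archived_on, RETENTION_YEARS[item.data_type])


def eligible_for_disposal(item: ArchivedItem, today: date) -> bool:
    """True only if retention has ended and no legal hold applies."""
    if item.legal_hold:
        return False
    return today >= retention_expires(item)


if __name__ == "__main__":
    item = ArchivedItem("inv-001", "financial_records", date(2015, 3, 31))
    print(retention_expires(item))                        # 2022-03-31
    print(eligible_for_disposal(item, date(2024, 1, 1)))  # True
```

In this sketch a legal hold overrides the schedule, because items relevant to e-discovery generally must be preserved regardless of their normal retention period.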

Beyond the electronic data that your data retention policy requires you to keep for several years, you might also need to protect and retain non-electronic information for set periods of time. Carefully consider storage options for such archives.

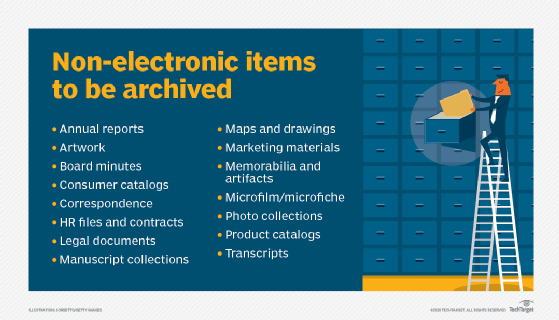

The table below lists examples of non-electronic items that you may need to archive.

You can scan and digitize certain items for storage. However, your organization might also need to retain original documents for legal or regulatory purposes. Original content, such as paintings and other artwork, might not lend itself to digital storage and could also have strict environmental requirements for long-term archiving.

Creating and preserving metadata is also a key consideration for data archiving. A data archive plan should specify how metadata is captured and kept with archived items, because metadata describes what each item is, where it came from and how it can be found later.
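One common way to preserve metadata and protect data integrity together is to store a manifest record alongside each archived item. This minimal Python sketch assumes a hypothetical record layout: a SHA-256 digest of the content for later integrity checks, the item size, a UTC archive timestamp and a free-form dictionary of descriptive metadata. The function names `make_manifest_entry` and `verify` are illustrative, not part of any particular archival product.

```python
import hashlib
import json
from datetime import datetime, timezone


def make_manifest_entry(item_id: str, content: bytes, metadata: dict) -> dict:
    """Build a manifest record to store alongside an archived item."""
    return {
        "item_id": item_id,
        # Digest recorded at archive time, used later to detect changes.
        "sha256": hashlib.sha256(content).hexdigest(),
        "size_bytes": len(content),
        "archived_at": datetime.now(timezone.utc).isoformat(),
        # Descriptive metadata (owner, data type, source system, ...).
        "metadata": dict(metadata),
    }


def verify(entry: dict, content: bytes) -> bool:
    """True if `content` still matches the digest in its manifest entry."""
    return hashlib.sha256(content).hexdigest() == entry["sha256"]


if __name__ == "__main__":
    data = b"Q3 invoice ledger"
    entry = make_manifest_entry(
        "inv-001", data, {"owner": "finance", "data_type": "financial_records"}
    )
    print(json.dumps(entry, indent=2))
    print(verify(entry, data))         # True
    print(verify(entry, data + b"x"))  # False
```

Serializing the manifest as JSON keeps it readable by other tools long after the software that created it has been retired, which matters for archives kept over extended time frames.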