RAID 5 vs. RAID 6: Capacity, performance, durability

RAID 5 and RAID 6 provide solid data protection, but both have their pros and cons. Find out what to compare when deciding between RAID 5 vs. RAID 6 in this tip.

To provision storage for a workload, IT pros must choose the right storage architecture. This decision may come down to choosing between RAID 5 vs. RAID 6.

RAID, or redundant array of independent disks, enables organizations to store data on multiple HDDs or SSDs and can help protect data in the event of a drive failure. RAID 5 uses a disk striping with parity and is ideal for application and file servers, while RAID 6 uses two parity stripes and often protects mission-critical data.

Neither RAID 5 nor RAID 6 is clearly superior to the other, and there are many variables to consider in order to make an informed decision about which RAID level to implement. To choose between RAID 5 vs. RAID 6, admins must think about the business use case, as well as the tradeoffs involved in choosing one level over the other.

Manage disk failures

RAID 5 and RAID 6 use redundancy to guard against hard disk failure. This redundancy takes the form of parity information that is written to each disk within the RAID set. If a disk within the set fails, an administrator can replace the disk and then the RAID array. The RAID array then uses the parity information it stores on the remaining disks to re-create the failed disk's contents on the new disk.

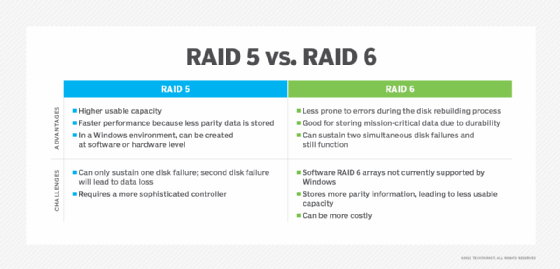

The primary difference between RAID 5 and RAID 6 is that a RAID 5 array can continue to function following a single disk failure, but a RAID 6 array can sustain two simultaneous disk failures and still continue to function. RAID 6 arrays are also less prone to errors during the disk rebuilding process.

Because RAID 6 arrays are more durable than their RAID 5 counterparts, they are often a good choice to store mission-critical data. Even so, this increased durability comes at a cost.

Overhead and usable capacity

RAID 5 and 6 arrays store parity information alongside the actual data, so the disk's full capacity is not available for data storage. In a RAID 5 array, the overhead associated with storing this parity information is the equivalent to one full disk. If, for example, a RAID 5 array contains three 1 TB hard disks, then the array's usable capacity will be 2 TB, not 3 TB.

RAID 6 arrays store more parity information than RAID 5 arrays, so 6 arrays have less usable capacity. A RAID 6 array's overhead is the equivalent to the full capacity of two of the array's disks. If, for example, a RAID 6 array contained four 1 TB disks, then the array would have a usable capacity of 2 TB. Incidentally, a RAID 6 array must have at least four disks, while RAID 5 arrays have a three-disk minimum.

Performance

RAID 5 arrays have relatively slow write performance because parity information must be written to the disks alongside the actual data. RAID 6 arrays are even slower because they store a greater volume of parity data than RAID 5 arrays do.

Organizations must consider how they will implement the RAID 5 or RAID 6 array. RAID 5 arrays can be created either at the hardware level or as a software array in a Windows environment. In fact, Windows has had the ability to create software RAID 5 arrays ever since the days of Windows NT.

However, Windows does not natively support software RAID 6 arrays, although Storage Spaces can achieve similar functionality. RAID 6 arrays will generally need to be created at the hardware level.