New WekaIO file system incorporates flash, object store

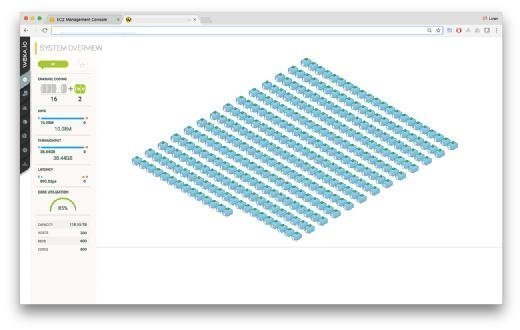

WekaIO launched its scale-out file system, which is optimized for server-based flash storage and designed to tier data to cheaper object storage on premises and in public clouds.

WekaIO emerged from stealth with a scale-out parallel file system designed to take advantage of server-based flash storage for performance and high-capacity object storage in public and private clouds for colder data.

Matrix, the software-defined storage that San Jose, California-based WekaIO launched this week, includes a Trinity management platform for overseeing potentially thousands of nodes. Matrix also bundles its own networking stack, which reduces latency, boosts throughput and conveys file system semantics more efficiently through proprietary drivers integrated into the Linux kernel, according to WekaIO CTO Liran Zvibel.

"By doing basically our own real-time operating system and running our own networking stack and our own I/O stack, we were able to get software-defined storage to actually perform," Zvibel said. "The Linux kernel was never intended to be used as a base for a storage service."

Zvibel said the WekaIO network stack is similar to NVMe over Fabrics (NVMe-oF) with two key distinctions: It uses standard Ethernet and, unlike NVMe-oF, doesn't require remote direct memory access (RDMA), special switches or NICs. He said WekaIO also enables the use of cheaper SAS and SATA drives in addition to ultra-low-latency NVMe-based PCI Express SSDs that NVMe-oF supports.

WekaIO software pools local SSDs in servers and presents flash storage as a single namespace to host applications. The file system's tiering layer extends the global namespace beyond pooled NAND flash capacity. Cold data gets offloaded to less expensive Amazon S3 API- or OpenStack Swift-compliant cloud object storage, on premises or in the public cloud.
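To make the tiering idea concrete, here is a minimal Python sketch of an age-based policy. It assumes a hypothetical 30-day cold threshold and a simplified, hypothetical `FileEntry` record; WekaIO's actual tiering criteria aren't described in the announcement. The point it illustrates is that a file changes tiers while keeping its path in the single namespace.

```python
from dataclasses import dataclass

# Hypothetical threshold: files untouched for this many days count as "cold".
COLD_AFTER_DAYS = 30


@dataclass
class FileEntry:
    path: str
    days_since_access: int
    tier: str = "flash"  # "flash" (pooled SSDs) or "object" (S3/Swift bucket)


def apply_tiering(entries, cold_after_days=COLD_AFTER_DAYS):
    """Move cold files to the object tier; their paths stay the same."""
    moved = []
    for e in entries:
        if e.tier == "flash" and e.days_since_access >= cold_after_days:
            e.tier = "object"
            moved.append(e.path)
    return moved


namespace = [
    FileEntry("/projects/render/frame_0001.exr", days_since_access=2),
    FileEntry("/projects/archive/2016_cut.mov", days_since_access=400),
]
print(apply_tiering(namespace))  # ['/projects/archive/2016_cut.mov']
```

Applications keep addressing `/projects/archive/2016_cut.mov` by the same name; only the tier backing it changes.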

The MatrixFS file system builds in N+4 data protection, with four data and two protection pieces, for withstanding four simultaneous device failures. Zvibel said N+4 uses algorithms reminiscent of erasure coding but addresses erasure coding's slow rebuild times and poor small-file performance. N+4 also consumes fewer flash and network resources than replication, according to Zvibel.
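For a rough sense of why an erasure-style scheme saves capacity compared with replication, the arithmetic below compares a generic k+m erasure code (such as Reed-Solomon), which survives any m lost pieces, against keeping full copies. This is not WekaIO's proprietary algorithm, and the 16+4 stripe is an illustrative assumption rather than a figure from the company.

```python
from fractions import Fraction


def erasure_overhead(data_pieces: int, protection_pieces: int) -> Fraction:
    """Raw capacity consumed per unit of user data for a k+m stripe."""
    return Fraction(data_pieces + protection_pieces, data_pieces)


def replication_overhead(failures_tolerated: int) -> int:
    """Full copies needed so that `failures_tolerated` losses still leave one copy."""
    return failures_tolerated + 1


print(erasure_overhead(4, 2))    # 3/2: four data pieces plus two protection pieces
print(erasure_overhead(16, 4))   # 5/4: illustrative wide stripe tolerating 4 losses
print(replication_overhead(4))   # 5: full copies needed to tolerate the same 4 losses
```

Tolerating four failures costs 1.25x raw capacity with the illustrative 16+4 stripe versus 5x with replication, and every extra copy must also cross the network on each write.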

To accommodate N+4, the minimum WekaIO system configuration is six SSDs. WekaIO stands behind a theoretical scaling limit of 20,000 back-end storage nodes with SSD agents, although the company has tested only up to 1,200.

Not all WekaIO nodes need to run storage services, so customers can scale cluster storage and compute independently. The theoretical scaling limit for WekaIO front ends -- clients that understand the cluster and its data placement -- is about 100,000, according to Zvibel. Customers have the option to run the software on bare metal servers, VMs or Docker containers.

WekaIO's data service front end supports NFS for legacy hosts, SMB for Windows clients and Hadoop Distributed File System (HDFS) for Hadoop applications.

Potential use cases

WekaIO envisions use cases for applications that require significant bandwidth, high IOPS and low latency in industries such as media and entertainment, life sciences, engineering, design and manufacturing.

Zvibel said he sees IBM's Spectrum Scale -- formerly General Parallel File System (GPFS) -- as WekaIO's main competitor. He claimed MatrixFS enables higher performance in smaller clusters and greater parallelism than GPFS. He said the WekaIO system writes data simultaneously to many flash devices, and reads a megabyte of data as 256 4K blocks concurrently. He also said every node on a WekaIO cluster is part of the distributed file system, understands data placement and contacts the correct SSD agents directly.
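The parallel read Zvibel describes can be sketched as follows: a 1 MB request is split into 256 4K blocks, each client computes locally which SSD agent holds each block, and all 256 requests are issued at once. The hash-based `agent_for` placement function, the agent count and the `fetch_block` stub are hypothetical stand-ins; WekaIO's placement scheme and wire protocol are proprietary.

```python
import asyncio
import hashlib

BLOCK_SIZE = 4 * 1024      # 4K blocks, per the article
READ_SIZE = 1024 * 1024    # a 1 MB read, i.e. 256 blocks
NUM_AGENTS = 8             # hypothetical number of SSD agents in the cluster


def agent_for(file_id: str, block_no: int) -> int:
    """Hypothetical placement: hash (file, block) to an SSD agent.

    Every client computes this locally, so it can contact the owning
    agent directly instead of asking a central server."""
    digest = hashlib.sha256(f"{file_id}:{block_no}".encode()).digest()
    return int.from_bytes(digest[:8], "big") % NUM_AGENTS


async def fetch_block(agent: int, file_id: str, block_no: int) -> bytes:
    """Stand-in for a network request to `agent`; returns a zero-filled block."""
    await asyncio.sleep(0)
    return bytes(BLOCK_SIZE)


async def parallel_read(file_id: str, first_block: int = 0) -> bytes:
    count = READ_SIZE // BLOCK_SIZE  # 256
    requests = [
        fetch_block(agent_for(file_id, b), file_id, b)
        for b in range(first_block, first_block + count)
    ]
    # gather() runs all 256 requests concurrently and returns them in block order.
    blocks = await asyncio.gather(*requests)
    return b"".join(blocks)


data = asyncio.run(parallel_read("file-42"))
print(len(data) // BLOCK_SIZE)  # 256
```

Because placement is a pure function of the file and block number, no metadata server sits in the read path, which is the contrast Zvibel draws with GPFS's NSD servers.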

"GPFS has clients that are similar to our clients, and they talk to a small number of NSD [Network Shared Disk] servers that front the metadata service," he said. "Actually, only the metadata servers themselves are connected to the SAN devices, and they run the parallel requests. In our case, any client can parallel request."

The WekaIO system can parallelize metadata operations and have up to 64,000 metadata servers spread across 20,000 physical servers, according to Zvibel.
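One common way to spread metadata across many servers is to hash each path to a shard and map shards onto hosts. The sketch below uses the 64,000 and 20,000 figures Zvibel cited, but the SHA-256 hashing and round-robin mapping are assumptions chosen for illustration, not WekaIO's documented design.

```python
import hashlib
from collections import Counter

NUM_SHARDS = 64_000   # metadata servers, per Zvibel's figure
NUM_HOSTS = 20_000    # physical servers, per Zvibel's figure


def shard_for(path: str) -> int:
    """Hypothetical: pick a metadata shard by hashing the file path."""
    digest = hashlib.sha256(path.encode()).digest()
    return int.from_bytes(digest[:8], "big") % NUM_SHARDS


def host_for(shard: int) -> int:
    """Round-robin shards onto physical hosts."""
    return shard % NUM_HOSTS


# 64,000 shards over 20,000 hosts: each host ends up running 3 or 4 shards.
load = Counter(host_for(s) for s in range(NUM_SHARDS))
print(min(load.values()), max(load.values()))  # 3 4

# Operations on different paths usually land on different shards,
# so they proceed in parallel instead of queuing on one server.
print(host_for(shard_for("/media/project/shot_010.exr")))
```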

"A lot of customers that currently don't get what they need from the NetApps and Isilons didn't go the GPFS way because GPFS is scary," said Zvibel, insinuating GPFS is complicated to use.

Other potential WekaIO challengers include startups Elastifile, Hedvig and Rozo Systems, as well as object storage vendors with NFS interfaces such as Caringo, Red Hat Ceph Storage and Scality. Open source Lustre is another potential competitor, but "it's too complex for the non-Ph.D. users," Zvibel said.

WekaIO will initially target large-scale deployments in need of high-performance storage "because it's most lucrative to find people with big problems," he said.

The annual Matrix software capacity license lists at $1,000 per TB when deployed in dedicated storage servers. In hyper-converged mode, the list price is $10,000 per node for up to two cores with unlimited SSD capacity, or $12,500 per node for up to eight cores and unlimited capacity.
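Applying the list prices above to a hypothetical deployment is simple arithmetic. This sketch assumes the hyper-converged price is charged per node per year and that the two tiers are selected purely by cores per node; discounts, support terms and how cores are counted are not covered in the announcement.

```python
def dedicated_license(capacity_tb: int) -> int:
    """Annual list price, in dollars, on dedicated storage servers ($1,000 per TB)."""
    return 1_000 * capacity_tb


def hyperconverged_license(nodes: int, cores_per_node: int) -> int:
    """Annual list price, in dollars, in hyper-converged mode (SSD capacity unlimited)."""
    if 1 <= cores_per_node <= 2:
        return 10_000 * nodes
    if 3 <= cores_per_node <= 8:
        return 12_500 * nodes
    raise ValueError("list prices are given only for one to eight cores per node")


print(dedicated_license(500))          # 500000
print(hyperconverged_license(20, 2))   # 200000
print(hyperconverged_license(20, 8))   # 250000
```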

"We scale our performance by cores," said Zvibel, "because we are able to parallelize our work on cores. The more cores you run it on, the higher performance you get in a linear fashion."

Potential differentiators

George Crump, president of analyst firm Storage Switzerland LLC, said potential WekaIO differentiators include flash optimization, hyper-converged and bare-metal configuration support, and variable compute performance under customer control.

"Usually with most flash systems, you hard set the amount of storage and compute they're going to get. You lock them in on two cores per node across the hyper-converged cluster," Crump said. "With Weka, you can say, 'For now, just use one core from every node.' And when 1,000 containers are created, you could also spin up 100 cores to handle the extra I/O load. Or, you could have it automatically spin up extra cores as the I/O hits certain levels."
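The automatic scaling Crump describes could be expressed as a threshold policy like the one below. The 50,000 IOPS-per-core figure is an invented placeholder, the linear relationship follows Zvibel's claim that performance scales with cores, and capping at eight cores simply mirrors the top hyper-converged pricing tier; none of this reflects WekaIO's actual controls.

```python
# Hypothetical: IOPS one storage core is assumed to absorb (linear scaling).
IOPS_PER_CORE = 50_000
MIN_CORES, MAX_CORES = 1, 8  # cap mirrors the eight-core pricing tier (assumption)


def cores_needed(current_iops: int) -> int:
    """Cores to dedicate to storage on one node for the observed I/O load."""
    needed = -(-current_iops // IOPS_PER_CORE)  # ceiling division
    return max(MIN_CORES, min(MAX_CORES, needed))


for iops in (10_000, 120_000, 1_000_000):
    print(iops, cores_needed(iops))
# 10000 1
# 120000 3
# 1000000 8
```

A real policy would also need hysteresis so that cores are not added and released on every small swing in load.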

Scott Sinclair, a senior analyst at Enterprise Strategy Group, said his company's lab validated that WekaIO's performance scales to millions of IOPS. He said another potential benefit is its ability to "take advantage of existing footprint within your environment" and use the cloud for additional capacity. A potential disadvantage could be complexity, given the degree of flexibility that WekaIO offers.

WekaIO raised $32.25 million in Series A and B funding and has engineering offices in Israel. Zvibel, chief product officer Omri Palmon and chief architect Maor Ben-Dayan founded WekaIO in 2013, after IBM acquired their first venture, XIV, in 2008. WekaIO president and CEO Michael Raam headed SSD controller maker SandForce, a 2012 LSI acquisition.