Fibre Channel

What is Fibre Channel?

Fibre Channel is a high-speed networking technology primarily used for transmitting data among data centers, computer servers, switches and storage at data rates of up to 128 Gbps. It was developed to overcome the limitations of the Small Computer System Interface (SCSI) and High-Performance Parallel Interface (HIPPI) by filling the need for a reliable and scalable high-throughput and low-latency protocol and interface.

Fibre Channel is especially suited for connecting servers to shared storage devices and interconnecting storage controllers and drives. The Fibre Channel interface was created for storage area networks (SANs). Fibre Channel devices can be as far as 10 kilometers -- approximately six miles -- apart if single-mode optical fiber is used as the physical cable medium; multimode fiber covers shorter runs. Optical fiber is not required for shorter distances. Fibre Channel also works using coaxial cable and ordinary telephone twisted pair. When using copper cabling, however, it is recommended that distances not exceed 100 feet.

Fibre Channel offers point-to-point, switched and loop interfaces to deliver lossless, in-order, raw block data. Because Fibre Channel is many times faster than SCSI, it has replaced that technology as the transmission interface between servers and clustered storage devices. Fibre Channel networks can still transport SCSI commands and information units, however, using the Fibre Channel Protocol (FCP). Fibre Channel is designed to interoperate with not just SCSI but also IP and other protocols.

Fibre Channel is also an option, along with remote direct memory access over Ethernet and InfiniBand, for high-performance computing environments transporting data under the NVM Express over Fabrics (NVMe-oF) specification to improve flash storage performance over a network.

NVM Express Inc. is the nonprofit organization, with more than 100 members, that developed NVMe-oF and published version 1.0 of the specification on June 5, 2016. The NVMe-oF 1.1 specification was released in 2019 to add finer control over I/O resource management, end-to-end flow control, support for NVMe/TCP and improved fabric-based communication. The current version, revision 1.1a, was published in 2021.

The T11 committee of the International Committee for Information Technology Standards (INCITS) developed a frame format and mapping protocol for applying NVMe-oF to Fibre Channel. It finalized and submitted the first version of the mapping protocol, under the FC-NVMe standard banner, to INCITS in August 2017 and came out with FC-NVMe 2 in 2020.

Fibre Channel is specified by the Fibre Channel Physical and Signaling Interface (FC-PH) standard, American National Standards Institute (ANSI) X3.230-1994, which is also published as International Organization for Standardization (ISO) 14165-1.

Fibre Channel history and developments

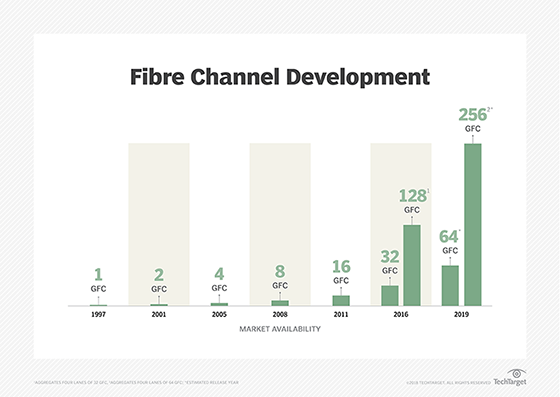

Development of Fibre Channel started in 1988 as part of the Intelligent Peripheral Interface (IPI) Enhanced Physical Project. The first draft of the standard was completed in 1989. ANSI approved the Fibre Channel standard in 1994. Fibre Channel was the first serial storage transport to hit gigabit speeds; its performance has consistently doubled every few years for the last 20 years.

Historically, Fibre Channel networking speeds have been labeled in Gbps -- 1 Gbps, 2 Gbps, 4 Gbps, 8 Gbps, 16 Gbps, 32 Gbps, 64 Gbps and 128 Gbps -- representing throughput performance. The naming convention was changed to Gigabit Fibre Channel (GFC) -- 1GFC, 2GFC, 4GFC, 8GFC, etc. -- by the Fibre Channel Industry Association (FCIA). Each Fibre Channel generation is backward-compatible with at least the two previous generations. For example, 8GFC maintains backward compatibility with 4GFC and 2GFC.
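The backward-compatibility rule can be sketched in a few lines of Python. This is only an illustration, assuming each port supports its own speed plus exactly the two generations before it (the text says "at least two") and covering single-lane speeds only. The names `GENERATIONS`, `supported_speeds` and `negotiate` are hypothetical and do not come from any Fibre Channel API.

```python
from typing import List, Optional

# Single-lane Fibre Channel speeds named in the text, slowest to fastest.
GENERATIONS = ["1GFC", "2GFC", "4GFC", "8GFC", "16GFC", "32GFC", "64GFC"]


def supported_speeds(port_speed: str, back: int = 2) -> List[str]:
    """Return the speeds a port can run at: its own plus `back` earlier generations."""
    i = GENERATIONS.index(port_speed)
    return GENERATIONS[max(0, i - back): i + 1]


def negotiate(port_a: str, port_b: str) -> Optional[str]:
    """Pick the fastest speed both ports support, or None if they share none."""
    common = set(supported_speeds(port_a)) & set(supported_speeds(port_b))
    if not common:
        return None
    return max(common, key=GENERATIONS.index)
```

Under these assumptions, a 32GFC port and an 8GFC port would settle on 8GFC, while a 64GFC port and a 4GFC port would share no common speed.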

With Generation 5 Fibre Channel, called 16GFC, the encoding mechanism changed from 8b/10b to the more efficient 64b/66b. Gen 5 performs at a line rate of 14.025 Gbaud with single-lane throughput of 1,600 MBps and bidirectional throughput of 3,200 MBps, according to FCIA's roadmap.

Gen 6 Fibre Channel added features such as N_Port ID virtualization, better energy efficiency and forward error correction to improve the reliability of Fibre Channel links and prevent application performance degradation and outages by avoiding data stream errors. It comes in 32GFC and 128GFC flavors. The former is single lane at a line rate of 28.05 Gbaud with 6,400 MBps throughput; the latter, with parallel functionality, has four lanes (28.05 Gbaud x 4) for 112.2 Gbaud line rate performance and 25,600 MBps throughput.
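The four-lane figures follow from the single-lane ones by simple multiplication. The sketch below takes the 32GFC per-lane values quoted above (28.05 Gbaud and 6,400 MBps) and scales them by the lane count. The `FcLink` class and its field names are hypothetical and exist only to illustrate the arithmetic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FcLink:
    name: str
    lanes: int
    lane_gbaud: float  # line rate of one lane, in Gbaud
    lane_mbps: int     # throughput of one lane, in MBps, as quoted in the text

    def total_gbaud(self) -> float:
        """Aggregate line rate across all lanes."""
        return round(self.lanes * self.lane_gbaud, 3)

    def total_mbps(self) -> int:
        """Aggregate throughput across all lanes."""
        return self.lanes * self.lane_mbps


GEN6_32GFC = FcLink("32GFC", lanes=1, lane_gbaud=28.05, lane_mbps=6_400)
GEN6_128GFC = FcLink("128GFC", lanes=4, lane_gbaud=28.05, lane_mbps=6_400)
```

With these inputs, `GEN6_128GFC.total_gbaud()` gives 112.2 and `GEN6_128GFC.total_mbps()` gives 25600.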

The FCIA roadmap extends well into the future to 1 Terabit Fibre Channel (1TFC), which is slated to perform at 204,800 MBps and have its T11 specification completed in 2029. Between that and Gen 6 Fibre Channel are generations that include single-lane 64GFC (57.8 Gbaud, 12,800 MBps) and four-lane 256GFC (4 x 57.8 Gbaud, 51,200 MBps). The roadmap also lists more advanced 128GFC and 256GFC versions with estimated T11 specification completion dates of 2023 and 2026, respectively, and a 512GFC (2026 for T11, 102,400 MBps) edition.

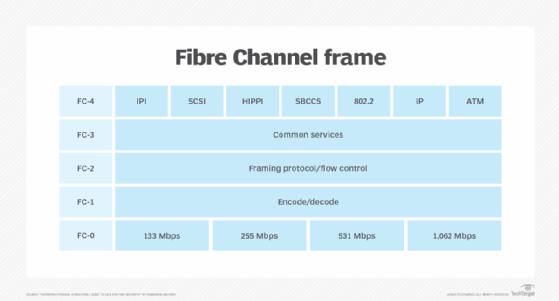

Fibre Channel layers

Fibre Channel defines layers of communication similar to, but different from, the Open Systems Interconnection (OSI) model. Like OSI, Fibre Channel splits the process of network communication into layers, or groups, of related functions. OSI includes seven such layers, while Fibre Channel has five layers. IP networks use packets, and Fibre Channel relies on frames to foster communication between nodes.
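To make the idea of a frame more concrete, the following sketch packs the 24-byte header that precedes the payload of a Fibre Channel frame. The field order used here (R_CTL, D_ID, CS_CTL, S_ID, TYPE, F_CTL, SEQ_ID, DF_CTL, SEQ_CNT, OX_ID, RX_ID, Parameter) follows the commonly documented FC-2 header layout; the function name `pack_fc_header`, the default values and the example addresses are illustrative assumptions, and the start-of-frame and end-of-frame delimiters and the CRC are left out.

```python
import struct


def pack_fc_header(r_ctl: int, d_id: int, s_id: int, fc_type: int,
                   f_ctl: int = 0, cs_ctl: int = 0, seq_id: int = 0,
                   df_ctl: int = 0, seq_cnt: int = 0,
                   ox_id: int = 0xFFFF, rx_id: int = 0xFFFF,
                   parameter: int = 0) -> bytes:
    """Pack the header fields into 24 big-endian bytes (six 32-bit words)."""
    for name, value in (("d_id", d_id), ("s_id", s_id), ("f_ctl", f_ctl)):
        if not 0 <= value <= 0xFFFFFF:
            raise ValueError(f"{name} must fit in 24 bits")
    word0 = (r_ctl << 24) | d_id      # routing control + destination address
    word1 = (cs_ctl << 24) | s_id     # class control + source address
    word2 = (fc_type << 24) | f_ctl   # payload protocol type + frame control
    return struct.pack(">IIIBBHHHI", word0, word1, word2,
                       seq_id, df_ctl, seq_cnt, ox_id, rx_id, parameter)


# Illustrative values only: TYPE 0x08 denotes SCSI-FCP; the addresses are made up.
header = pack_fc_header(r_ctl=0x06, d_id=0x010200, s_id=0x010300, fc_type=0x08)
```

The 24-bit source and destination fields are the port addresses that switches use to deliver a frame.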

The five Fibre Channel layers are the following:

- Upper Layer Protocol Mapping: FC Layer 4

- Common Services Layer: FC Layer 3

- Signaling/Framing Layer: FC Layer 2

- Transmission Layer: FC Layer 1

- Physical Layer: FC Layer 0

Within a Fibre Channel topology, each of the five layers works with the one below it and above it to deliver distinct functions. Innovation is a central advantage of a layered design: a new technology can be introduced into a layer without disrupting or requiring the redesign of the other layers.

For example, cabling, such as copper or optical cable, fits into the physical layer. If a new cable design or technology is introduced, it would only need to meet the compatibility requirements of Layer 0.
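The layer stack and the "works with the one below it and above it" relationship can be modeled as a small enumeration. This is a sketch for illustration only; `FcLayer` and `neighbors` are hypothetical names, not part of any standard library for Fibre Channel.

```python
from enum import IntEnum
from typing import List


class FcLayer(IntEnum):
    PHYSICAL = 0           # FC Layer 0: cables and connectors
    TRANSMISSION = 1       # FC Layer 1
    SIGNALING_FRAMING = 2  # FC Layer 2
    COMMON_SERVICES = 3    # FC Layer 3
    ULP_MAPPING = 4        # FC Layer 4: upper layer protocol mapping


def neighbors(layer: FcLayer) -> List[FcLayer]:
    """Return the layers directly below and above `layer`, lower layer first."""
    return [FcLayer(n) for n in (layer - 1, layer + 1)
            if FcLayer.PHYSICAL <= n <= FcLayer.ULP_MAPPING]
```

In this model, a change to the cabling touches only `FcLayer.PHYSICAL`, whose sole neighbor is `FcLayer.TRANSMISSION`.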

Fibre Channel components

The four main Fibre Channel components are the following:

Switches. A Fibre Channel switch enables high-availability, low-latency, high-performance and lossless data transfer in a Fibre Channel fabric. It reads the source and destination of each frame and forwards the frame to its intended destination. As the main components used in a SAN, Fibre Channel switches can interconnect thousands of storage ports and servers. Features in Fibre Channel director-class switches include encryption and zoning, which blocks unwanted traffic.

Host bus adapters (HBAs). Fibre Channel HBAs are cards that connect servers to storage and network devices. An HBA is similar in principle to an Ethernet network adapter. It offloads server processing of data storage tasks and improves server performance. When Fibre Channel and Ethernet networks first converged, HBA vendors developed converged network adapters (CNAs) that combine the functionality of a Fibre Channel HBA with an Ethernet network interface card (NIC).

Ports. Fibre Channel switches and HBAs connect to each other and to servers through physical or virtual ports. Data in a Fibre Channel fabric node is sent and received through ports that come in an assortment of logical configurations. Fibre Channel switches can have anywhere from fewer than 10 ports to hundreds of ports in a chassis. The connections between ports and HBAs are established using physical copper or optical cables.

Software. Fibre Channel installations depend on a software layer for device drivers, along with control and management between hosts, ports, and devices. Software offers a visualization of the Fibre Channel environment and enables oversight and control of Fibre Channel resources from a central console.
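The zoning feature mentioned under switches can be pictured as a membership check: two ports may talk only if at least one zone contains both of them. The sketch below is a simplified model under that assumption, not a switch vendor's implementation. The zone names and worldwide port names are made up, and `can_communicate` is a hypothetical helper.

```python
from typing import Dict, Set


def can_communicate(zones: Dict[str, Set[str]], port_a: str, port_b: str) -> bool:
    """Return True if some zone lists both ports as members."""
    return any(port_a in members and port_b in members
               for members in zones.values())


# Hypothetical zone set: each zone maps a name to the port names it contains.
ZONES = {
    "db_zone": {"10:00:00:00:c9:aa:aa:01", "50:06:01:60:bb:bb:00:01"},
    "backup_zone": {"10:00:00:00:c9:aa:aa:02", "50:06:01:60:bb:bb:00:02"},
}

DB_HOST = "10:00:00:00:c9:aa:aa:01"
DB_ARRAY = "50:06:01:60:bb:bb:00:01"
BACKUP_ARRAY = "50:06:01:60:bb:bb:00:02"
```

Here the database host can reach the database array but not the backup array, because no zone contains both of those ports.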

Fibre Channel design and configuration

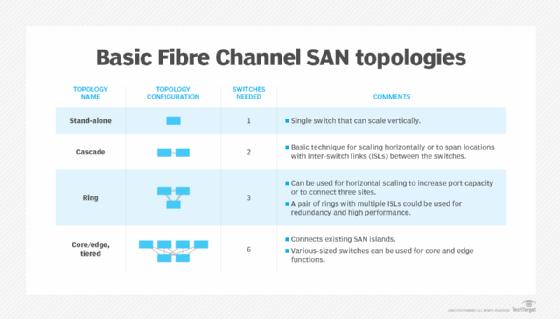

Fibre Channel supports three main topologies to link Fibre Channel ports together and enable devices, such as switches and HBAs, to connect servers to a network and storage.

Point-to-point. The simplest and most limited Fibre Channel topology connects two devices or ports together, such as linking a host server to direct-attached storage.

Arbitrated loop. Devices are linked in a circular, ringlike manner. Each node or device on the ring sends data to the next node. Bandwidth is shared among all devices. If one device or port fails, all of them could be interrupted unless a Fibre Channel hub is used to connect multiple devices and bypass failed ports. The maximum number of devices that can be in an arbitrated loop is 127; for practical reasons, the number is limited to far fewer.

Switched fabric. All devices in this topology connect and communicate via switches, which optimize data paths using the Fabric Shortest Path First routing protocol and let multiple pairs of ports interconnect concurrently. Ports do not connect directly to each other; instead, traffic flows through switches. When one port fails, the operation of other ports should not be affected. All nodes in the fabric work simultaneously, increasing efficiency, while redundancy of paths between devices increases availability. Switches can be added to the fabric without taking the network down.
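The path selection performed in a switched fabric can be approximated with a standard least-cost path search. The sketch below uses Dijkstra's algorithm over a small map of interswitch links; it illustrates only the idea of choosing the cheapest route and does not reproduce the Fabric Shortest Path First protocol itself. The switch names, link costs and the `shortest_path` function are hypothetical.

```python
import heapq
from typing import Dict, List, Optional, Tuple


def shortest_path(links: Dict[str, Dict[str, int]], src: str,
                  dst: str) -> Optional[Tuple[int, List[str]]]:
    """Return (total_cost, [switch, ...]) for the least-cost path, or None."""
    queue = [(0, src, [src])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == dst:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, link_cost in links.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(queue, (cost + link_cost, neighbor, path + [neighbor]))
    return None


# Hypothetical fabric: two edge switches, each linked to two core switches.
FABRIC = {
    "edge1": {"core1": 500, "core2": 500},
    "edge2": {"core1": 500, "core2": 1000},
    "core1": {"edge1": 500, "edge2": 500},
    "core2": {"edge1": 500, "edge2": 1000},
}
```

In this example, traffic from `edge1` to `edge2` takes the route through `core1` at a total cost of 1000, while the route through `core2` remains available as a redundant, more expensive path.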

Interconnection types within the switched fabric topology include the following:

- Single-switch topology is the simplest switch topology. There is just one switch and no interswitch links. This topology is seldom used because it presents a single point of failure.

- Cascade topology lines switches up and connects them together one after the other in the manner of a queue. Adding an interswitch link to interconnect the first and last switch in the cascade closes the loop to form a switched fabric ring topology.

- Mesh topology is when every switch in the Fibre Channel fabric connects to every other switch.

- Core-edge topology takes a tiered approach to a mesh topology by using higher-performance director switches as core switches. It connects servers to the edge fabric and storage to core switches. These, in turn, are interconnected to facilitate communication between servers and storage.

- Edge-core-edge topology enables storage and servers to connect to the edge fabric, but core switch communication is used only to connect and scale edge switches. This topology configuration helps extend the flow of SAN traffic across long distances and eases the management of storage and servers when each is at a different edge of a Fibre Channel fabric.
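One practical difference between these interconnection types is how many interswitch links they need. The following sketch counts links for the single-switch, cascade, ring and mesh layouts described above, assuming exactly one link between each connected pair of switches. The function name `isl_count` and the topology labels are hypothetical.

```python
def isl_count(topology: str, switches: int) -> int:
    """Count interswitch links, assuming one link per connected switch pair."""
    if switches < 1:
        raise ValueError("a fabric needs at least one switch")
    if topology == "single":
        if switches != 1:
            raise ValueError("a single-switch topology has exactly one switch")
        return 0
    if topology == "cascade":
        return switches - 1                   # switches lined up one after another
    if topology == "ring":
        if switches < 3:
            raise ValueError("a ring needs at least three switches")
        return switches                       # cascade plus one link closing the loop
    if topology == "mesh":
        return switches * (switches - 1) // 2  # every switch linked to every other
    raise ValueError(f"unknown topology: {topology}")
```

For four switches, this gives three links for a cascade, four for a ring and six for a full mesh, which shows why a full mesh becomes expensive as the fabric grows.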

Fibre Channel vs. iSCSI SANs

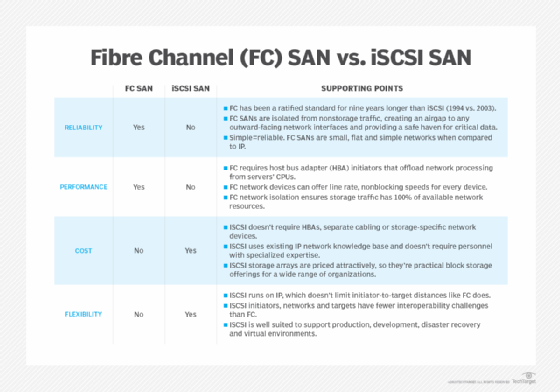

Fibre Channel is a Layer 2 switching technology in which hardware handles the entire protocol. By contrast, internet SCSI (iSCSI) is a Layer 3 switching technology that runs over Ethernet. Here, software, hardware or a combination of both can control the protocol.

Ethernet-based iSCSI transports SCSI packets over a TCP/IP network. Because iSCSI uses commonplace Ethernet, it doesn't require buying costly and often complex adapters and network cards. This makes iSCSI cheaper and easier to deploy.

Most data centers with a high-capacity Fibre Channel SAN, or FC SAN, for mission-critical workloads prefer Fibre Channel networking over iSCSI. That's mostly because Fibre Channel is a proven technology that data center admins know will reliably handle demanding workloads without dropping data packets.

Specialized installation and configuration skills are required to get an FC SAN up and running properly. By contrast, an IT staff can implement an iSCSI SAN on an existing network using common switches and Ethernet NICs. With iSCSI, there is only one network to build and manage, while Fibre Channel requires two networks: an FC SAN for storage and an Ethernet network for everything else.

All major storage vendors offer iSCSI SAN arrays in addition to their Fibre Channel mainstays. Some sell unified, multiprotocol storage platforms with both iSCSI and Fibre Channel.

Fibre Channel vs. Ethernet

Fibre Channel and Ethernet are two different types of network technologies that traditionally serve different purposes across the enterprise.

Fibre Channel

In general terms, Fibre Channel supports the in-order and lossless transmission of raw block data. These capabilities have made it indispensable for high-performance data handling between servers and storage subsystems.

Fibre Channel networks operate at powers-of-two-based speeds, ranging from 1 Gbps to 128 Gbps, with 256 Gbps and 512 Gbps versions coming in the future.

Fibre Channel is light on security features, but most deployments are isolated within the enterprise and are not connected to other networks or the internet. This provides natural security from intrusion and hacking.

Ethernet

By comparison, Ethernet provides general purpose, out-of-order, loss-tolerant packet networking technology used in local area networks (LANs) and wide area networks. Ethernet is broadly available and highly standardized. The standards it's based on include IEEE 802.3 and the conventional OSI layer model. Ethernet also supports long cable distances and handles speeds ranging from 1 Gbps to 400 Gbps.

Its wide adoption and reliance on the frequently targeted TCP/IP protocol suite make Ethernet networks and connected systems more vulnerable to attack, requiring additional security measures. Still, Ethernet is often the network of choice for basic storage connectivity, such as network-attached storage systems.

Fibre Channel over Ethernet (FCoE)

Given the tradeoffs between Fibre Channel and Ethernet, designers have sought ways to connect the two different technologies. Fibre Channel over Ethernet is a network approach that encapsulates Fibre Channel data and data formats over common 10 Gbps and faster Ethernet networks. This essentially replaces Layer 0 and Layer 1 of the Fibre Channel stack with corresponding Ethernet layers and allows Fibre Channel commands and data to travel over Ethernet LANs.

However, FCoE is not a routable protocol and will not work over routed IP networks. FCoE is an international standard defined in T11 FC-BB-5, which was published in 2009. When implemented properly, FCoE integrates Fibre Channel with Ethernet-based applications and management software.

FCoE adds three major capabilities to allow Fibre Channel operation over Ethernet:

- Fibre Channel frames must be encapsulated into Ethernet frames.

- FCoE must facilitate a lossless environment in which frames are not dropped, and therefore do not have to be retransmitted, when the network is congested.

- FCoE must map Fibre Channel N_port IDs to conventional Ethernet MAC addresses. Computers can access FCoE using CNAs, which provide both Fibre Channel HBA and Ethernet NIC functions on the same device.
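The address mapping in the last item can be illustrated with fabric-provided MAC addresses, one scheme in which the 48-bit Ethernet address is formed by placing a 24-bit prefix (the FC-MAP) in front of the 24-bit N_Port ID. The sketch below assumes the default FC-MAP value of 0E-FC-00; the function name `fpma` and the example port ID are hypothetical.

```python
FCOE_ETHERTYPE = 0x8906    # EtherType that marks an Ethernet frame as carrying FCoE
DEFAULT_FC_MAP = 0x0EFC00  # assumed default 24-bit prefix for fabric-provided MACs


def fpma(n_port_id: int, fc_map: int = DEFAULT_FC_MAP) -> str:
    """Build a MAC address string from a 24-bit prefix and a 24-bit N_Port ID."""
    if not 0 <= n_port_id <= 0xFFFFFF:
        raise ValueError("N_Port ID must fit in 24 bits")
    if not 0 <= fc_map <= 0xFFFFFF:
        raise ValueError("FC-MAP must fit in 24 bits")
    mac = (fc_map << 24) | n_port_id
    return ":".join(f"{byte:02x}" for byte in mac.to_bytes(6, "big"))
```

For example, `fpma(0x010203)` returns `0e:fc:00:01:02:03`, so the original Fibre Channel address stays visible in the lower three bytes of the Ethernet address.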

Fibre Channel vs. fibre optic

Fibre Channel supports both copper and optical fiber cabling depending on the deployment. Fibre Channel copper cabling is well-suited for short-distance connections up to about 100 feet. Optical connections using fibre optic cables are intended for medium- to long-distance connections, up to about six miles, such as campus networks and metropolitan area networks.

The choice of cable is governed by the HBA port choice and the ports available on Fibre Channel switches and storage gear. In many cases, hardware will provide port options for both copper and fibre cabling that users can deploy as required.
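The distance guidance above can be expressed as a simple lookup. This sketch uses only the two limits quoted in this article (about 100 feet for copper and about 10 kilometers for optical fiber); real limits depend on the link speed, cable grade and optics in use. The function name `suggest_medium` and the returned labels are hypothetical.

```python
COPPER_MAX_M = 100 * 0.3048  # about 100 feet, expressed in meters
OPTICAL_MAX_M = 10_000       # about 10 kilometers


def suggest_medium(distance_m: float) -> str:
    """Suggest a cable medium for a link of the given length in meters."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    if distance_m <= COPPER_MAX_M:
        return "copper or optical"
    if distance_m <= OPTICAL_MAX_M:
        return "optical"
    return "beyond the distances quoted here"
```

A 20-meter run inside a rack row could use either medium, while a 500-meter link between buildings would call for optical fiber.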
