hypervisor

What is a hypervisor?

A hypervisor is software that makes it possible to run multiple virtual machines (VMs), each with its own operating system, on a single host computer's hardware. The hypervisor runs one or more VMs as guests on the host's physical hardware and keeps each guest operating system independent of the others. The guest VMs share the system's physical compute resources, including processor cycles, memory space and network bandwidth.

Hypervisors are also known as virtual machine monitors (VMMs). They let organizations consolidate workloads onto fewer physical servers and run multiple isolated applications on one server. Hypervisors are commonly managed with specialized software, such as VMware's vCenter, which manages vSphere environments.
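To make consolidation concrete, the following Python sketch packs hypothetical VM workloads onto as few physical hosts as possible using first-fit decreasing, a common bin-packing heuristic. It is only an illustration under stated assumptions: the VM names, memory sizes and 64 GB host capacity are made up, and real placement tools weigh CPU, memory, storage and network together rather than memory alone.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """A physical host with a fixed memory capacity (hypothetical model)."""
    capacity_gb: int
    vms: list[str] = field(default_factory=list)
    used_gb: int = 0

    def fits(self, size_gb: int) -> bool:
        return self.used_gb + size_gb <= self.capacity_gb

    def place(self, name: str, size_gb: int) -> None:
        self.vms.append(name)
        self.used_gb += size_gb


def consolidate(vm_memory_gb: dict[str, int], host_capacity_gb: int) -> list[Host]:
    """First-fit decreasing: place the largest VMs first, each on the first host with room."""
    hosts: list[Host] = []
    for name, size in sorted(vm_memory_gb.items(), key=lambda kv: kv[1], reverse=True):
        if size > host_capacity_gb:
            raise ValueError(f"{name} needs {size} GB, more than one host provides")
        target = next((h for h in hosts if h.fits(size)), None)
        if target is None:  # no existing host has room, so add another one
            target = Host(host_capacity_gb)
            hosts.append(target)
        target.place(name, size)
    return hosts


# Hypothetical workloads: VM name -> memory in GB
workloads = {"web1": 8, "web2": 8, "db": 32, "cache": 16, "batch": 24, "mail": 12}
hosts = consolidate(workloads, host_capacity_gb=64)
for i, h in enumerate(hosts):
    print(f"host{i}: {h.vms} ({h.used_gb}/{h.capacity_gb} GB)")
# host0: ['db', 'batch', 'web1'] (64/64 GB)
# host1: ['cache', 'mail', 'web2'] (36/64 GB)
```

Here six workloads that might otherwise each occupy a dedicated physical server fit on two hosts. Placing the largest VMs first tends to leave smaller gaps that later, smaller VMs can fill.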

Types of hypervisors

Hypervisors are traditionally implemented as a software layer, such as VMware vSphere or Microsoft Hyper-V. But they can also be implemented as code embedded in a system's firmware. There are two principal types of hypervisors: Type 1 and Type 2 hypervisors.

Type 1 hypervisors

Type 1 hypervisors are deployed directly on the system's hardware without any underlying operating system or other software. Because they have direct access to system resources, they are also called bare-metal hypervisors. They are the most common type of hypervisor in enterprise data centers. Examples include VMware ESXi, the hypervisor at the core of vSphere, and Microsoft Hyper-V.

Type 2 hypervisors

Type 2 hypervisors run as a software layer atop a host operating system and are usually called hosted hypervisors. Examples include VMware Workstation Player and Parallels Desktop. Hosted hypervisors are often found on endpoints such as personal computers.

What are hypervisors used for?

Virtualization technology adds a crucial layer of management and control over the data center and enterprise environment, which makes hypervisors a key part of any system administrator or system operator's job. Those IT professionals must understand how the hypervisor works and how to perform management tasks, such as VM configuration and monitoring, operating system management, migration and snapshots.

The role of a hypervisor is also expanding. For example, storage hypervisors virtualize all the storage resources in an environment to create centralized storage pools. Admins can then provision those pools without concerning themselves with the physical location of the storage. Storage hypervisors are a key element of software-defined storage.
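As a rough illustration of pooling, the sketch below models a storage pool that aggregates several physical devices and provisions logical volumes by size alone; the caller never chooses a device. The class, device names and capacities are hypothetical, and this naive allocator simply fills the devices with the most free space first, which is far simpler than what a real storage hypervisor does.

```python
class StoragePool:
    """Toy storage pool: aggregates physical devices and allocates volumes as extents."""

    def __init__(self, devices: dict[str, int]):
        self.free = dict(devices)  # device name -> free capacity in GB
        self.volumes: dict[str, list[tuple[str, int]]] = {}

    @property
    def free_gb(self) -> int:
        return sum(self.free.values())

    def provision(self, volume: str, size_gb: int) -> None:
        """Carve a volume out of the pool without the caller picking a physical device."""
        if size_gb > self.free_gb:
            raise ValueError(f"pool has only {self.free_gb} GB free")
        extents: list[tuple[str, int]] = []
        remaining = size_gb
        # Take space from the devices with the most free capacity first.
        for dev in sorted(self.free, key=self.free.get, reverse=True):
            if remaining == 0:
                break
            take = min(self.free[dev], remaining)
            if take:
                self.free[dev] -= take
                extents.append((dev, take))
                remaining -= take
        self.volumes[volume] = extents


pool = StoragePool({"ssd-a": 500, "ssd-b": 250, "hdd-c": 1000})
pool.provision("vm1-disk", 1200)
print(pool.volumes["vm1-disk"])  # [('hdd-c', 1000), ('ssd-a', 200)]
print(pool.free_gb)              # 550
```

The 1,200 GB volume is larger than any single device, yet the pool can still provision it because the admin works with aggregate capacity rather than physical locations.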

Hypervisors also enable network virtualization so admins can use software to create, change, manage and remove networks and network devices without ever touching physical network devices. As with storage, network virtualization is a key element in broader software-defined networking and software-defined data center platforms.

Benefits of hypervisors

Among the benefits hypervisors provide are the following:

- Efficiency. A physical host system running multiple guest VMs can vastly improve the efficiency of a data center's underlying hardware. Physical, nonvirtualized servers might only be able to host one operating system and a single application. A hypervisor lets the system host multiple VM instances on the same physical system, each running an independent operating system and application. This approach uses far more of the system's available compute resources.

- Mobility. VMs are also mobile. The abstraction that takes place in a hypervisor makes the VM independent of the underlying hardware. Traditional software can be tightly coupled to the underlying server hardware, so moving the application to another server requires time-consuming and error-prone reinstallation and reconfiguration. Because the hypervisor makes the underlying hardware details irrelevant to the VMs, admins can move or migrate VMs between local or remote virtualized servers that have sufficient computing resources, with little or no disruption to the VM. Moving a running VM this way is called live migration.

- Security and reliability. Even though VMs run on the same physical machine, a hypervisor keeps them logically isolated from each other. A VM has no native knowledge of or dependence on any other VMs, so an error, crash or malware attack on one VM doesn't spread to other VMs on the same or other machines. This isolation makes hypervisor technology highly secure and reliable, although the hypervisor itself must still be protected, because a compromised hypervisor exposes every VM it hosts.

- Version protection. VM snapshots let admins instantly revert a VM to a previous state. Snapshots (or checkpoints, as Microsoft calls them) aren't intended to substitute for backups. Instead, they act as a short-term safety net, especially when performing maintenance on a VM. For example, an admin can take a snapshot before upgrading a VM's operating system. If the upgrade fails, the admin can use the snapshot to restore the operating system and instantly return the VM to its previous state.
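Many hypervisors implement snapshots with differencing disks: taking a snapshot freezes the current disk contents, and later writes go to a new delta layer instead of overwriting them. The toy Python model below (a dictionary of block numbers, not any vendor's on-disk format; the `VirtualDisk` class is hypothetical) shows why reverting can be nearly instant and why a snapshot is not a backup.

```python
class VirtualDisk:
    """Toy layered disk: layers[0] holds the original contents, later layers are deltas."""

    def __init__(self, blocks: dict[int, bytes]):
        self.layers: list[dict[int, bytes]] = [dict(blocks)]
        self.snapshots: dict[str, int] = {}  # snapshot name -> number of frozen layers

    def read(self, block: int) -> bytes:
        # The newest layer that holds the block wins.
        for layer in reversed(self.layers):
            if block in layer:
                return layer[block]
        return b"\x00"  # unwritten blocks read as zeros in this toy model

    def write(self, block: int, data: bytes) -> None:
        # Writes only touch the top layer, so layers frozen by a snapshot stay intact.
        self.layers[-1][block] = data

    def snapshot(self, name: str) -> None:
        # Freeze the current state by starting a fresh, empty delta layer on top.
        self.snapshots[name] = len(self.layers)
        self.layers.append({})

    def revert(self, name: str) -> None:
        # Discard every delta written after the snapshot; no block data is copied.
        depth = self.snapshots[name]
        del self.layers[depth:]
        self.layers.append({})
        # Snapshots taken after this one pointed at layers that no longer exist.
        self.snapshots = {k: v for k, v in self.snapshots.items() if v <= depth}


disk = VirtualDisk({0: b"os-v1"})
disk.snapshot("before-upgrade")
disk.write(0, b"os-v2-broken")
print(disk.read(0))  # b'os-v2-broken'
disk.revert("before-upgrade")
print(disk.read(0))  # b'os-v1'
```

Reverting only drops a layer, which is why it is fast. The frozen layers still live on the same storage as the running VM, however, so if that storage is lost, the snapshot is lost with it; a backup, by contrast, is an independent copy.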

Hypervisor security concerns and practices

The hypervisor security process must ensure the hypervisor is secure throughout its lifecycle, including during development and implementation. If an attacker gains unauthorized access to the hypervisor, management software or the software that orchestrates the virtual environment, then they could potentially gain access to all data stored in each VM.

Other possible vulnerabilities in a virtualized environment include shared hardware caches, the network and potential access to the physical server.

The following security practices can help mitigate the risks of unauthorized access to a hypervisor:

- Limiting the number of users with access to the local host system.

- Limiting attack surfaces by running hypervisors on a dedicated host that doesn't perform any additional roles.

- Adhering to patch management best practices to keep systems updated.

- Configuring the host to act as part of a guarded fabric, a Hyper-V feature that lets only trusted hosts run shielded VMs.

- Encrypting VMs to prevent rogue admins from gaining access.

- Using BitLocker or a similar encryption service to encrypt the storage on which the VMs reside.

- Using role-based access control to limit administrative privileges.

- Using dedicated physical network adapters for management, VM migration and cluster traffic.

- Running periodic security tests to ensure the hypervisor is secure.
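The role-based access control practice can be sketched as a mapping from roles to permitted actions that is checked before any management operation runs. The role names, users and actions below are hypothetical examples, not the permission model of vCenter, Hyper-V or any other product; real platforms define far more granular privileges and scope them to specific hosts, clusters or VMs.

```python
from enum import Enum, auto


class Action(Enum):
    VIEW_VM = auto()
    POWER_VM = auto()
    SNAPSHOT_VM = auto()
    MIGRATE_VM = auto()
    CONFIGURE_HOST = auto()


# Hypothetical roles: each grants only the actions that job needs (least privilege).
ROLE_PERMISSIONS: dict[str, frozenset[Action]] = {
    "auditor": frozenset({Action.VIEW_VM}),
    "vm_operator": frozenset({Action.VIEW_VM, Action.POWER_VM, Action.SNAPSHOT_VM}),
    "host_admin": frozenset(Action),  # every defined action
}

# Hypothetical user-to-role assignments.
USER_ROLES: dict[str, set[str]] = {
    "alice": {"host_admin"},
    "bob": {"vm_operator"},
    "carol": {"auditor"},
}


def is_allowed(user: str, action: Action) -> bool:
    """Allow an action only if one of the user's roles grants it; unknown users get nothing."""
    return any(action in ROLE_PERMISSIONS.get(role, frozenset())
               for role in USER_ROLES.get(user, set()))


print(is_allowed("bob", Action.SNAPSHOT_VM))     # True
print(is_allowed("bob", Action.CONFIGURE_HOST))  # False
print(is_allowed("mallory", Action.VIEW_VM))     # False
```

Denying by default (unknown users and unknown roles map to no permissions) keeps a misconfiguration from silently granting administrative access.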

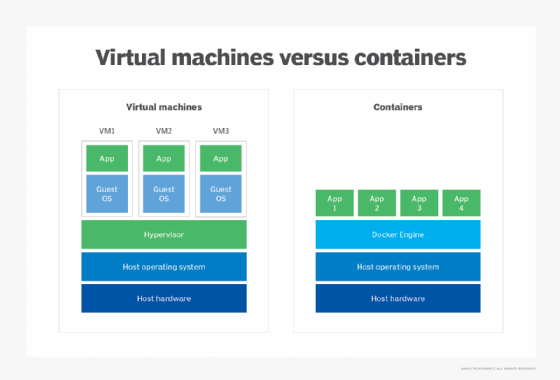

Containers vs. hypervisors

Hypervisors host VMs and are designed to create an environment that mimics a collection of physical machines. Each VM contains its own independent operating system. By contrast, containers share the host operating system's kernel. Each container runs a separate application or microservice, typically built from a base image, but depends on the shared host kernel to operate.

Microsoft offers two different container options. Windows Server containers use a traditional container architecture and share the Windows Server host's kernel. Hyper-V containers act as a hybrid: each container runs inside a lightweight Hyper-V VM with its own kernel, which adds VM-level isolation to the container infrastructure.

Kubernetes has become the de facto standard tool for managing Linux containers across private, public and hybrid cloud environments. Kubernetes is an open source system originally created by Google; version 1.0 was released in 2015. It automates the scheduling, deployment, scaling and maintenance of containers across cluster nodes.

Cloud hypervisors

Cloud service providers use hypervisor technology to give customers access to a variety of cloud applications and infrastructure through virtual environments. Because VMs are decoupled from the underlying hardware, cloud hypervisors can help organizations migrate applications to a cloud environment quickly, making hypervisor technology a boon for many digital transformation initiatives.

Among major cloud computing service providers, Amazon Web Services uses the Nitro Hypervisor, Microsoft Azure uses the Azure Hypervisor and Google Cloud uses a KVM-based hypervisor for Compute Engine.

Hypervisor vendors and market

Numerous hypervisors are available, ranging from free platforms to enterprise-grade products. The following is a sampling of hypervisor providers and their systems:

- Citrix Hypervisor.

- Linux KVM (Kernel-based Virtual Machine).

- Nutanix AHV (Acropolis Hypervisor).

- Microsoft Hyper-V.

- Oracle VM Server.

- Oracle VM VirtualBox.

- VMware ESXi.

History of virtualization and hypervisors

The 1960s and 1970s saw the creation of the earliest hypervisors. In 1966, IBM shipped the IBM System/360-67, its first production computer system capable of full virtualization as well as time-sharing services. IBM also put its CP-40 system into production use in 1967. This system ran on a modified S/360-40 that provided virtualization capabilities, and it let multiple user applications run concurrently for the first time. IBM released the Control Program/Cambridge Monitor System (CP/CMS) in 1968; it was used through the 1970s.

In 1970, IBM released System/370, adding virtual memory support in 1972. Since then, virtualization has been a feature in all IBM mainframe systems. Around this time, user communities also began freely sharing source code to further develop virtual systems with hypervisors.

IBM introduced the Processor Resource/System Manager (PR/SM) hypervisor in 1985; it could manage logical partitions (LPARs). In the mid-2000s, more operating systems, including Linux, Unix and Windows, began supporting hypervisors. Lower-cost hypervisors also appeared, taking advantage of better hardware and offering improved consolidation. In 2005, processor vendors began adding hardware support for virtualization to x86 chips.