charge-coupled device

What is a charge-coupled device (CCD)?

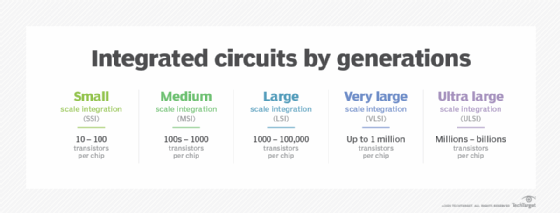

A charge-coupled device (CCD) is a light-sensitive integrated circuit that captures images by converting photons to electrons. A CCD sensor breaks the image into picture elements, or pixels. The light falling on each pixel is converted into an electrical charge whose magnitude is related to the intensity of light captured by that pixel.

For many years, CCDs were the sensors of choice in a wide range of devices, but they're steadily being replaced by image sensors based on complementary metal-oxide-semiconductor (CMOS) technology.

The CCD was invented in 1969 at Bell Labs -- now part of Nokia -- by George Smith and Willard Boyle. However, the researchers' efforts were focused primarily on computer memory, and it wasn't until the 1970s that Michael F. Tompsett, also with Bell Labs, refined the CCD's design to better accommodate imaging.

After that, Tompsett and other researchers continued to improve the CCD, leading to enhancements in light sensitivity and overall image quality. The CCD soon became the primary technology used for digital imagery.

What does a charge-coupled device do?

Small, light-sensitive areas are etched into a silicon surface to create an array of pixels that collect the photons and generate electrons. The number of electrons in each pixel is directly proportional to the intensity of light captured by the pixel. After all the electrons have been generated, they undergo a shifting process that moves them toward an output node, where they're amplified and converted to voltage.

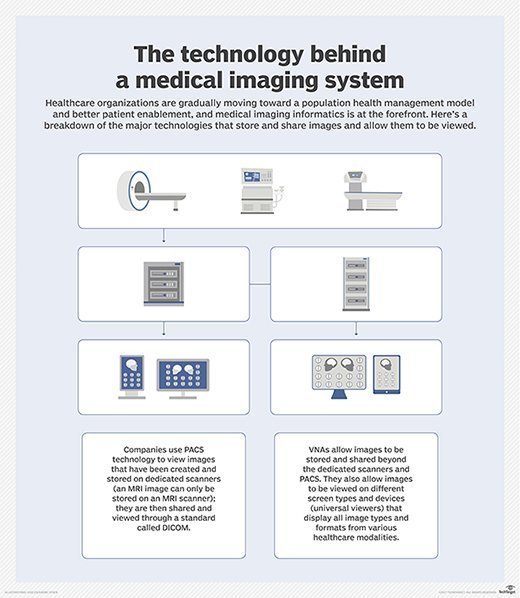
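
The capture-and-readout sequence described above can be illustrated with a minimal Python sketch. It is a toy model under stated assumptions, not a description of any real sensor: the function names `expose` and `read_out`, the quantum efficiency of 0.5 and the conversion gain of 2 microvolts per electron are all hypothetical values chosen for illustration, and noise, charge-transfer losses and saturation are ignored.

```python
def expose(photons, quantum_efficiency=0.5):
    """Convert the photons hitting each pixel into collected electrons.

    The electron count is proportional to the light intensity.
    quantum_efficiency is an assumed, illustrative value.
    """
    return [[round(p * quantum_efficiency) for p in row] for row in photons]


def read_out(charge, microvolts_per_electron=2.0):
    """Shift charge packets to a single output node and convert them to voltage.

    Rows are shifted one at a time into a serial register; the register is
    then shifted one pixel at a time into the output node, where the charge
    is converted to a voltage. The gain is an assumed, illustrative value.
    """
    rows = [list(row) for row in charge]  # copy so the input is not modified
    image = []
    while rows:
        serial_register = rows.pop()  # the row nearest the register leaves first
        line = []
        while serial_register:
            electrons = serial_register.pop()  # pixel nearest the output node
            line.append(electrons * microvolts_per_electron)
        image.append(line[::-1])  # restore left-to-right pixel order
    return image[::-1]  # restore top-to-bottom row order


photons = [[100, 200],
           [0, 50]]
voltages = read_out(expose(photons))
print(voltages)  # [[100.0, 200.0], [0.0, 50.0]]
```

The point of the sketch is that every charge packet passes through the same output node in sequence, so all pixels share a single charge-to-voltage conversion stage.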

In the past, CCDs could deliver much better image quality than other types of sensors, including those based on CMOS technology. As a result, they were used in a wide range of devices, including scanners, bar code readers, microscopes, medical equipment and astronomical telescopes. The devices also found use in machine vision for robots, in optical character recognition (OCR), and in the processing of satellite photographs and radar imagery, especially in meteorology.

In addition, CCDs were used in digital cameras to deliver better resolution than older technologies. By 2010, digital cameras could produce images with over one million pixels, yet they sold for under $1,000. The term megapixel was coined in reference to such cameras.

CCDs vs. CMOS sensors

Despite the early successes of CCDs, CMOS sensors have been gaining favor across the industry, and they're now used extensively in consumer products for capturing images. CMOS sensors are easier and cheaper to manufacture than CCD sensors. They also use less energy and produce less heat.

Even so, CMOS sensors have a reputation for being more susceptible to image noise, which can impact quality and resolution. But their quality has improved significantly in recent years, and CMOS sensors now dominate the image sensor market.

Despite the proliferation of CMOS sensors, CCD sensors are still used for applications that demand precision and a high degree of sensitivity. For example, CCD sensors continue to be used in medical, scientific and industrial equipment. Even the Hubble Space Telescope sports a CCD sensor. But the momentum is clearly behind CMOS, and the future of CCD remains unclear.
